What is AI?

Internet nastiness, name-calling, and other not-so-petty, world-altering disagreements

AI is sexy, AI is cool. AI is entrenching inequality, upending the job market, and wrecking education. AI is a theme-park ride, AI is a magic trick. AI is our final invention, AI is a moral obligation. AI is the buzzword of the decade, AI is marketing jargon from 1955. AI is humanlike, AI is alien. AI is super-smart and as dumb as dirt. The AI boom will boost the economy, the AI bubble is about to burst. AI will increase abundance and empower humanity to maximally flourish in the universe. AI will kill us all.

What the hell is everybody talking about?

Artificial intelligence is the hottest technology of our time. But what is it? It sounds like a stupid question, but it’s one that’s never been more urgent. Here’s the short answer: AI is a catchall term for a set of technologies that make computers do things that are thought to require intelligence when done by people. Think of recognizing faces, understanding speech, driving cars, writing sentences, answering questions, creating pictures. But even that definition contains multitudes.

And that right there is the problem. What does it mean for machines to understand speech or write a sentence? What kinds of tasks could we ask such machines to do? And how much should we trust the machines to do them?

As this technology moves from prototype to product faster and faster, these have become questions for all of us. But (spoilers!) I don’t have the answers. I can’t even tell you what AI is. The people making it don’t know what AI is either. Not really. “These are the kinds of questions that are important enough that everyone feels like they can have an opinion,” says Chris Olah, chief scientist at the San Francisco–based AI lab Anthropic. “I also think you can argue about this as much as you want and there’s no evidence that’s going to contradict you right now.”

But if you’re willing to buckle up and come for a ride, I can tell you why nobody really knows, why everybody seems to disagree, and why you’re right to care about it.

Let’s start with an offhand joke.

Back in 2022, partway through the first episode of Mystery AI Hype Theater 3000, a party-pooping podcast in which the irascible cohosts Alex Hanna and Emily Bender have a lot of fun sticking “the sharpest needles” into some of Silicon Valley’s most inflated sacred cows, they make a ridiculous suggestion. They’re hate-reading aloud from a 12,500-word Medium post by a Google VP of engineering, Blaise Agüera y Arcas, titled “Can machines learn how to behave?” Agüera y Arcas makes a case that AI can understand concepts in a way that’s somehow analogous to the way humans understand concepts—concepts such as moral values. In short, perhaps machines can be taught to behave.

Hanna and Bender are having none of it. They decide to replace the term “AI” with “mathy math”—you know, just lots and lots of math.

The irreverent phrase is meant to collapse what they see as bombast and anthropomorphism in the sentences being quoted. Pretty soon Hanna, a sociologist and director of research at the Distributed AI Research Institute, and Bender, a computational linguist at the University of Washington (and internet-famous critic of tech industry hype), open a gulf between what Agüera y Arcas wants to say and how they choose to hear it.

“How should AIs, their creators, and their users be held morally accountable?” asks Agüera y Arcas.

How should mathy math be held morally accountable? asks Bender.

“There’s a category error here,” she says. Hanna and Bender don’t just reject what Agüera y Arcas says; they claim it makes no sense. “Can we please stop it with the ‘an AI’ or ‘the AIs’ as if they are, like, individuals in the world?” Bender says.

It might sound as if they’re talking about different things, but they’re not. Both sides are talking about large language models, the technology behind the current AI boom. It’s just that the way we talk about AI is more polarized than ever. In May, OpenAI CEO Sam Altman teased the latest update to GPT-4, his company’s flagship model, by tweeting, “Feels like magic to me.”

There’s a lot of road between math and magic.

AI has acolytes, with a faith-like belief in the technology’s current power and inevitable future improvement. Artificial general intelligence is in sight, they say; superintelligence is coming behind it. And it has heretics, who pooh-pooh such claims as mystical mumbo-jumbo.

The buzzy popular narrative is shaped by a pantheon of big-name players, from Big Tech marketers in chief like Sundar Pichai and Satya Nadella to edgelords of industry like Elon Musk and Altman to celebrity computer scientists like Geoffrey Hinton. Sometimes these boosters and doomers are one and the same, telling us that the technology is so good it’s bad.

As AI hype has ballooned, a vocal anti-hype lobby has risen in opposition, ready to smack down its ambitious, often wild claims. Pulling in this direction are a raft of researchers, including Hanna and Bender, and also outspoken industry critics like influential computer scientist and former Googler Timnit Gebru and NYU cognitive scientist Gary Marcus. All have a chorus of followers bickering in their replies.

In short, AI has come to mean all things to all people, splitting the field into fandoms. It can feel as if different camps are talking past one another, not always in good faith.

Maybe you find all this silly or tiresome. But given the power and complexity of these technologies—which are already used to determine how much we pay for insurance, how we look up information, how we do our jobs, etc. etc. etc.—it’s about time we at least agreed on what it is we’re even talking about.

Yet in all the conversations I’ve had with people at the cutting edge of this technology, no one has given a straight answer about exactly what it is they’re building. (A quick side note: This piece focuses on the AI debate in the US and Europe, largely because many of the best-funded, most cutting-edge AI labs are there. But of course there’s important research happening elsewhere, too, in countries with their own varying perspectives on AI, particularly China.) Partly, it’s the pace of development. But the science is also wide open. Today’s large language models can do amazing things. The field just can’t find common ground on what’s really going on under the hood.

These models are trained to complete sentences. They appear to be able to do a lot more—from solving high school math problems to writing computer code to passing law exams to composing poems. When a person does these things, we take it as a sign of intelligence. What about when a computer does it? Is the appearance of intelligence enough?

These questions go to the heart of what we mean by “artificial intelligence,” a term people have actually been arguing about for decades. But the discourse around AI has become more acrimonious with the rise of large language models that can mimic the way we talk and write with thrilling/chilling (delete as applicable) realism.

We have built machines with humanlike behavior but haven’t shrugged off the habit of imagining a humanlike mind behind them. This leads to over-egged evaluations of what AI can do; it hardens gut reactions into dogmatic positions, and it plays into the wider culture wars between techno-optimists and techno-skeptics.

Add to this stew of uncertainty a truckload of cultural baggage, from the science fiction that I’d bet many in the industry were raised on, to far more malign ideologies that influence the way we think about the future. Given this heady mix, arguments about AI are no longer simply academic (and perhaps never were). AI inflames people’s passions and makes grownups call each other names.

“It’s not in an intellectually healthy place right now,” Marcus says of the debate. For years Marcus has pointed out the flaws and limitations of deep learning, the tech that launched AI into the mainstream, powering everything from LLMs to image recognition to self-driving cars. His 2001 book The Algebraic Mind argued that neural networks, the foundation on which deep learning is built, are incapable of reasoning by themselves. (We’ll skip over it for now, but I’ll come back to it later and we’ll see just how much a word like “reasoning” matters in a sentence like this.)

Marcus says that he has tried to engage Hinton—who last year went public with existential fears about the technology he helped invent—in a proper debate about how good large language models really are. “He just won’t do it,” says Marcus. “He calls me a twit.” (Having talked to Hinton about Marcus in the past, I can confirm that. “ChatGPT clearly understands neural networks better than he does,” Hinton told me last year.) Marcus also drew ire when he wrote an essay titled “Deep learning is hitting a wall.” Altman responded to it with a tweet: “Give me the confidence of a mediocre deep learning skeptic.”

At the same time, banging his drum has made Marcus a one-man brand and earned him an invitation to sit next to Altman and give testimony last year before the US Senate’s AI oversight committee.

And that’s why all these fights matter more than your average internet nastiness. Sure, there are big egos and vast sums of money at stake. But more than that, these disputes matter when industry leaders and opinionated scientists are summoned by heads of state and lawmakers to explain what this technology is and what it can do (and how scared we should be). They matter when this technology is being built into software we use every day, from search engines to word-processing apps to assistants on your phone. AI is not going away. But if we don’t know what we’re being sold, who’s the dupe?

“It is hard to think of another technology in history about which such a debate could be had—a debate about whether it is everywhere, or nowhere at all,” Stephen Cave and Kanta Dihal write in Imagining AI, a 2023 collection of essays about how different cultural beliefs shape people’s views of artificial intelligence. “That it can be held about AI is a testament to its mythic quality.”

Above all else, AI is an idea—an ideal—shaped by worldviews and sci-fi tropes as much as by math and computer science. Figuring out what we are talking about when we talk about AI will clarify many things. We won’t agree on them, but common ground on what AI is would be a great place to start talking about what AI should be.

What is everyone really fighting about, anyway?

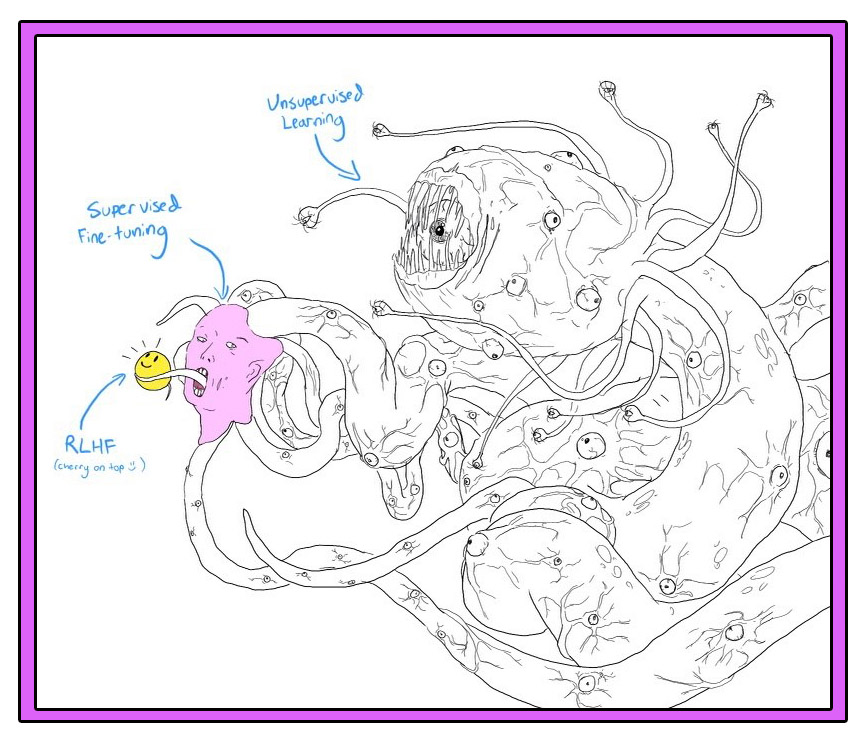

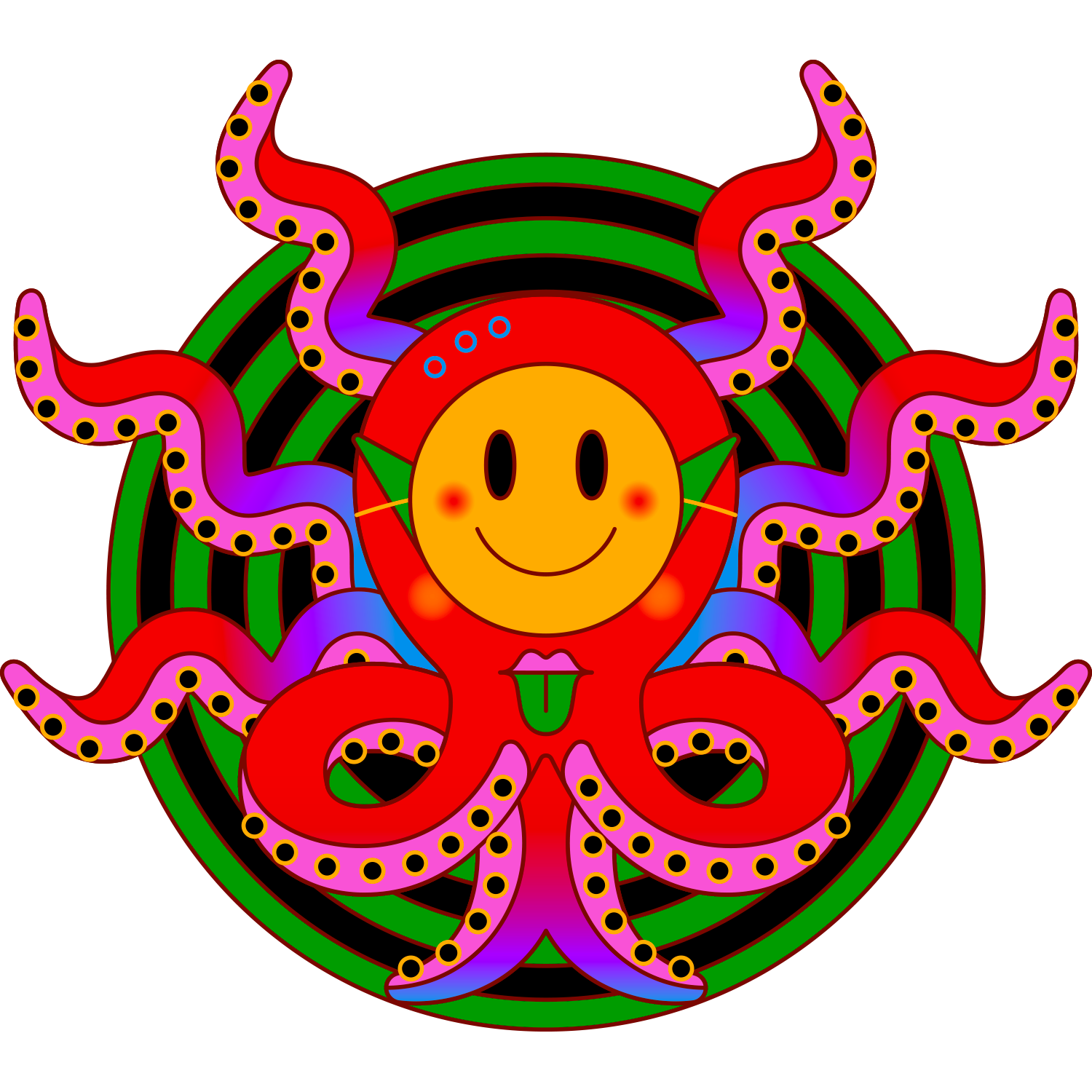

In late 2022, soon after OpenAI released ChatGPT, a new meme started circulating online that captured the weirdness of this technology better than anything else. In most versions, a Lovecraftian monster called the Shoggoth, all tentacles and eyeballs, holds up a bland smiley-face emoji as if to disguise its true nature. ChatGPT presents as humanlike and accessible in its conversational wordplay, but behind that façade lie unfathomable complexities—and horrors. (“It was a terrible, indescribable thing vaster than any subway train—a shapeless congeries of protoplasmic bubbles,” H.P. Lovecraft wrote of the Shoggoth in his 1936 novella At the Mountains of Madness.)

For years one of the best-known touchstones for AI in pop culture was The Terminator, says Dihal. But by putting ChatGPT online for free, OpenAI gave millions of people firsthand experience of something different. “AI has always been a sort of really vague concept that can expand endlessly to encompass all kinds of ideas,” she says. But ChatGPT made those ideas tangible: “Suddenly, everybody has a concrete thing to refer to.” What is AI? For millions of people the answer was now: ChatGPT.

The AI industry is selling that smiley face hard. Consider how The Daily Show recently skewered the hype, as expressed by industry leaders. Silicon Valley’s VC in chief, Marc Andreessen: “This has the potential to make life much better … I think it’s honestly a layup.” Altman: “I hate to sound like a utopic tech bro here, but the increase in quality of life that AI can deliver is extraordinary.” Pichai: “AI is the most profound technology that humanity is working on. More profound than fire.”

Jon Stewart: “Yeah, suck a dick, fire!”

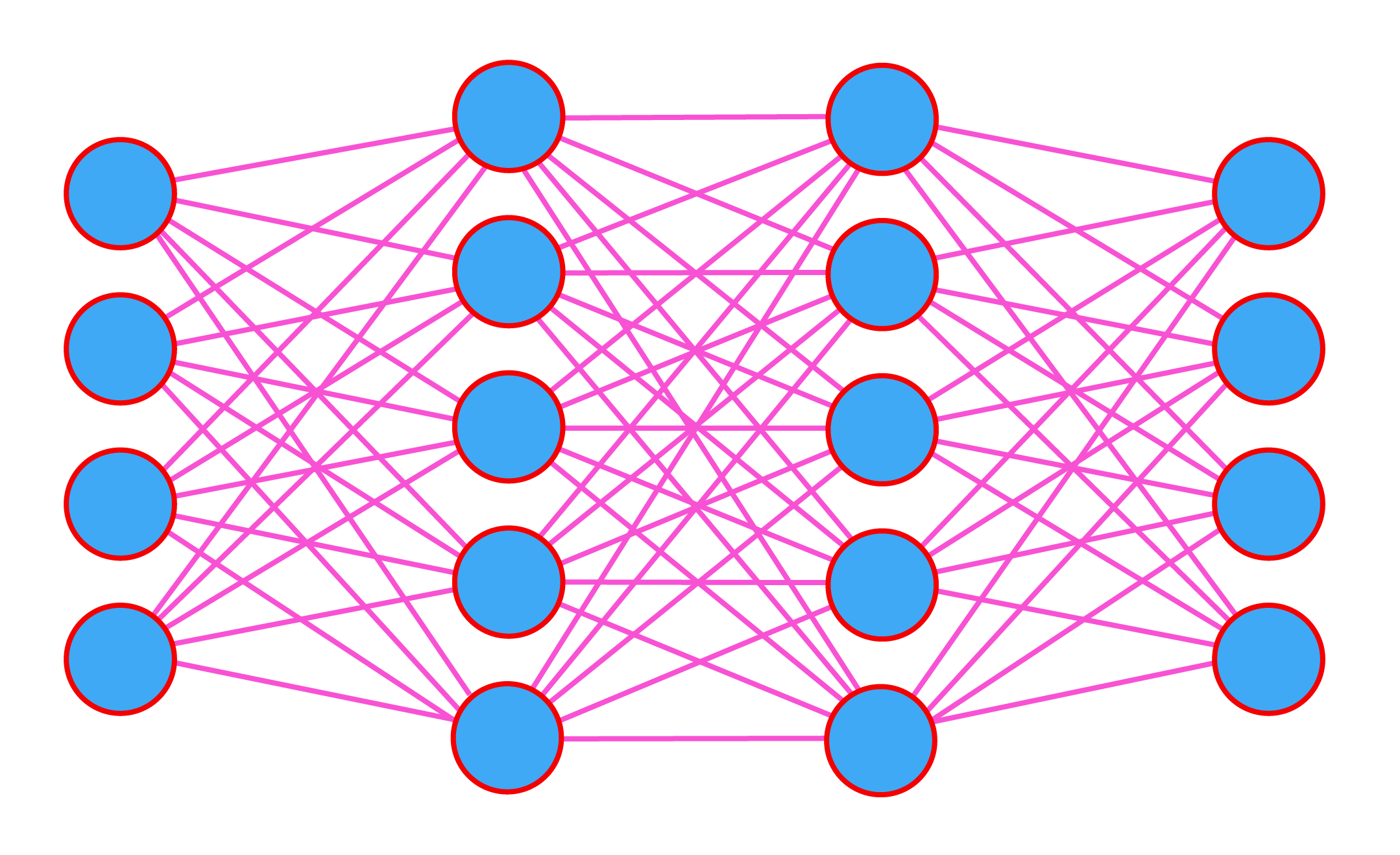

But as the meme points out, ChatGPT is a friendly mask. Behind it is a monster called GPT-4, a large language model built from a vast neural network that has ingested more words than most of us could read in a thousand lifetimes. During training, which can last months and cost tens of millions of dollars, such models are given the task of filling in blanks in sentences taken from millions of books and a significant fraction of the internet. They do this task over and over again. In a sense, they are trained to be supercharged autocomplete machines. The result is a model that has turned much of the world’s written information into a statistical representation of which words are most likely to follow other words, captured across billions and billions of numerical values.

It’s math—a hell of a lot of math. Nobody disputes that. But is it just that, or does this complex math encode algorithms capable of something akin to human reasoning or the formation of concepts?

Many of the people who answer yes to that question believe we’re close to unlocking something called artificial general intelligence, or AGI, a hypothetical future technology that can do a wide range of tasks as well as humans can. A few of them have even set their sights on what they call superintelligence, sci-fi technology that can do things far better than humans. This cohort believes AGI will drastically change the world—but to what end? That’s yet another point of tension. It could fix all the world’s problems—or bring about its doom.

Today AGI appears in the mission statements of the world’s top AI labs. But the term was invented in 2007 as a niche attempt to inject some pizzazz into a field that was then best known for applications that read handwriting on bank deposit slips or recommended your next book to buy. The idea was to reclaim the original vision of an artificial intelligence that could do humanlike things (more on that soon).

It was really an aspiration more than anything else, Google DeepMind cofounder Shane Legg, who coined the term, told me last year: “I didn’t have an especially clear definition.”

AGI became the most controversial idea in AI. Some talked it up as the next big thing: AGI was AI but, you know, much better. Others claimed the term was so vague that it was meaningless.

“AGI used to be a dirty word,” Ilya Sutskever told me, before he resigned as chief scientist at OpenAI.

But large language models, and ChatGPT in particular, changed everything. AGI went from dirty word to marketing dream.

Which brings us to what I think is one of the most illustrative disputes of the moment—one that sets up the sides of the argument and the stakes in play.

Seeing magic in the machine

A few months before the public launch of OpenAI’s large language model GPT-4 in March 2023, the company shared a prerelease version with Microsoft, which wanted to use the new model to revamp its search engine Bing.

At the time, Sebastian Bubeck was studying the limitations of LLMs and was somewhat skeptical of their abilities. In particular, Bubeck—the vice president of generative AI research at Microsoft Research in Redmond, Washington—had been trying and failing to get the technology to solve middle school math problems. Things like: x – y = 0; what are x and y? “My belief was that reasoning was a bottleneck, an obstacle,” he says. “I thought that you would have to do something really fundamentally different to get over that obstacle.”

Then he got his hands on GPT-4. The first thing he did was try those math problems. “The model nailed it,” he says. “Sitting here in 2024, of course GPT-4 can solve linear equations. But back then, this was crazy. GPT-3 cannot do that.”

But Bubeck’s real road-to-Damascus moment came when he pushed it to do something new.

The thing about middle school math problems is that they are all over the internet, and GPT-4 may simply have memorized them. “How do you study a model that may have seen everything that human beings have written?” asks Bubeck. His answer was to test GPT-4 on a range of problems that he and his colleagues believed to be novel.

Playing around with Ronen Eldan, a mathematician at Microsoft Research, Bubeck asked GPT-4 to give, in verse, a mathematical proof that there are an infinite number of primes.

Here’s a snippet of GPT-4’s response: “If we take the smallest number in S that is not in P / And call it p, we can add it to our set, don’t you see? / But this process can be repeated indefinitely. / Thus, our set P must also be infinite, you’ll agree.”

Cute, right? But Bubeck and Eldan thought it was much more than that. “We were in this office,” says Bubeck, waving at the room behind him via Zoom. “Both of us fell from our chairs. We couldn’t believe what we were seeing. It was just so creative and so, like, you know, different.”

The Microsoft team also got GPT-4 to generate the code to add a horn to a cartoon picture of a unicorn drawn in LaTeX, a document typesetting system. Bubeck thinks this shows that the model could read the existing LaTeX code, understand what it depicted, and identify where the horn should go.

“There are many examples, but a few of them are smoking guns of reasoning,” he says—reasoning being a crucial building block of human intelligence.

Bubeck, Eldan, and a team of other Microsoft researchers described their findings in a paper that they called “Sparks of artificial general intelligence”: “We believe that GPT-4’s intelligence signals a true paradigm shift in the field of computer science and beyond.” When Bubeck shared the paper online, he tweeted: “time to face it, the sparks of #AGI have been ignited.”

The Sparks paper quickly became infamous—and a touchstone for AI boosters. Agüera y Arcas and Peter Norvig, a former director of research at Google and coauthor of Artificial Intelligence: A Modern Approach, perhaps the most popular AI textbook in the world, cowrote an article called “Artificial General Intelligence Is Already Here.” Published in Noema, a magazine backed by an LA think tank called the Berggruen Institute, their argument uses the Sparks paper as a jumping-off point: “Artificial General Intelligence (AGI) means many different things to different people, but the most important parts of it have already been achieved by the current generation of advanced AI large language models,” they wrote. “Decades from now, they will be recognized as the first true examples of AGI.”

Since then, the hype has continued to balloon. Leopold Aschenbrenner, who at the time was a researcher at OpenAI focusing on superintelligence, told me last year: “AI progress in the last few years has been just extraordinarily rapid. We’ve been crushing all the benchmarks, and that progress is continuing unabated. But it won’t stop there. We’re going to have superhuman models, models that are much smarter than us.” (He was fired from OpenAI in April because, he claims, he raised security concerns about the tech he was building and “ruffled some feathers.” He has since set up a Silicon Valley investment fund.)

In June, Aschenbrenner put out a 165-page manifesto arguing that AI will outpace college graduates by “2025/2026” and that “we will have superintelligence, in the true sense of the word” by the end of the decade. But others in the industry scoff at such claims. When Aschenbrenner tweeted a chart to show how fast he thought AI would continue to improve given how fast it had improved in the last few years, the tech investor Christian Keil replied that by the same logic, his baby son, who had doubled in size since he was born, would weigh 7.5 trillion tons by the time he was 10.
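Keil’s reply is a reductio of naive exponential extrapolation: take a doubling observed over a short window and assume it continues unchanged. A back-of-the-envelope sketch makes the arithmetic concrete. Only the 7.5-trillion-ton figure and the 10-year horizon come from the anecdote; treating the tons as metric and assuming a 3.5 kg birth weight are illustrative assumptions, and the helper names are hypothetical.

```python
import math

# From the anecdote: a claimed weight of 7.5 trillion tons at age 10.
# Assumptions (not from the source): metric tons and a 3.5 kg birth weight.
CLAIMED_WEIGHT_KG = 7.5e12 * 1000  # 7.5 trillion metric tons, in kilograms
BIRTH_WEIGHT_KG = 3.5              # hypothetical newborn weight
MONTHS = 10 * 12                   # ten years


def doublings_needed(start, end):
    """How many times must `start` double to reach `end`?"""
    return math.log2(end / start)


def extrapolate(start, doubling_months, months):
    """Naive exponential extrapolation: keep the same doubling period forever."""
    return start * 2 ** (months / doubling_months)


n = doublings_needed(BIRTH_WEIGHT_KG, CLAIMED_WEIGHT_KG)
period = MONTHS / n  # implied months per doubling
print(f"{n:.1f} doublings, i.e. one every {period:.1f} months")
print(f"{extrapolate(BIRTH_WEIGHT_KG, period, MONTHS):.3g} kg at age 10")
```

Under those assumptions, the joke implies roughly 51 consecutive doublings, about one every 2.4 months, sustained for ten straight years. Each step looks modest; compounding does the rest.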

It’s no surprise that “sparks of AGI” has also become a byword for over-the-top buzz. “I think they got carried away,” says Marcus, speaking about the Microsoft team. “They got excited, like ‘Hey, we found something! This is amazing!’ They didn’t vet it with the scientific community.” Bender refers to the Sparks paper as a “fan fiction novella.”

Not only was it provocative to claim that GPT-4’s behavior showed signs of AGI, but Microsoft, which uses GPT-4 in its own products, has a clear interest in promoting the capabilities of the technology. “This document is marketing fluff masquerading as research,” one tech COO posted on LinkedIn.

Some also felt the paper’s methodology was flawed. Its evidence is hard to verify because it comes from interactions with a version of GPT-4 that was not made available outside OpenAI and Microsoft. The public version has guardrails that restrict the model’s capabilities, admits Bubeck. This made it impossible for other researchers to re-create his experiments.

One group tried to re-create the unicorn example with a coding language called Processing, which GPT-4 can also use to generate images. They found that the public version of GPT-4 could produce a passable unicorn but not flip or rotate that image by 90 degrees. It may seem like a small difference, but such things really matter when you’re claiming that the ability to draw a unicorn is a sign of AGI.

The key thing about the examples in the Sparks paper, including the unicorn, is that Bubeck and his colleagues believe they are genuine examples of creative reasoning. This means the team had to be certain that examples of these tasks, or ones very like them, were not included anywhere in the vast data sets that OpenAI amassed to train its model. Otherwise, the results could be interpreted instead as instances where GPT-4 reproduced patterns it had already seen.

Bubeck insists that they set the model only tasks that would not be found on the internet. Drawing a cartoon unicorn in LaTeX was surely one such task. But the internet is a big place. Other researchers soon pointed out that there are indeed online forums dedicated to drawing animals in LaTeX. “Just fyi we knew about this,” Bubeck replied on X. “Every single query of the Sparks paper was thoroughly looked for on the internet.”

(This didn’t stop the name-calling: “I’m asking you to stop being a charlatan,” Ben Recht, a computer scientist at the University of California, Berkeley, tweeted back before accusing Bubeck of “being caught flat-out lying.”)

Bubeck insists the work was done in good faith, but he and his coauthors admit in the paper itself that their approach was not rigorous—notebook observations rather than foolproof experiments.

Still, he has no regrets: “The paper has been out for more than a year and I have yet to see anyone give me a convincing argument that the unicorn, for example, is not a real example of reasoning.”

That’s not to say he can give me a straight answer to the big question—though his response reveals what kind of answer he’d like to give. “What is AI?” Bubeck repeats back to me. “I want to be clear with you. The question can be simple, but the answer can be complex.”

“There are many simple questions out there to which we still don’t know the answer. And some of those simple questions are the most profound ones,” he says. “I’m putting this on the same footing as, you know, What is the origin of life? What is the origin of the universe? Where did we come from? Big, big questions like this.”

Seeing only math in the machine

Before Bender became one of the chief antagonists of AI’s boosters, she made her mark on the AI world as a coauthor on two influential papers. (Both peer-reviewed, she likes to point out—unlike the Sparks paper and many of the others that get much of the attention.) The first, written with Alexander Koller, a fellow computational linguist at Saarland University in Germany, and published in 2020, was called “Climbing towards NLU” (NLU is natural-language understanding).

“The start of all this for me was arguing with other people in computational linguistics whether or not language models understand anything,” she says. (Understanding, like reasoning, is typically taken to be a basic ingredient of human intelligence.)

Bender and Koller argue that a model trained exclusively on text will only ever learn the form of a language, not its meaning. Meaning, they argue, consists of two parts: the words (which could be marks or sounds) plus the reason those words were uttered. People use language for many reasons, such as sharing information, telling jokes, flirting, warning somebody to back off, and so on. Stripped of that context, the text used to train LLMs like GPT-4 lets them mimic the patterns of language well enough for many sentences generated by the LLM to look exactly like sentences written by a human. But there’s no meaning behind them, no spark. It’s a remarkable statistical trick, but completely mindless.

They illustrate their point with a thought experiment. Imagine two English-speaking people stranded on neighboring deserted islands. There is an underwater cable that lets them send text messages to each other. Now imagine that an octopus, which knows nothing about English but is a whiz at statistical pattern matching, wraps its suckers around the cable and starts listening in to the messages. The octopus gets really good at guessing what words follow other words. So good that when it breaks the cable and starts replying to messages from one of the islanders, she believes that she is still chatting with her neighbor. (In case you missed it, the octopus in this story is a chatbot.)

The person talking to the octopus would stay fooled for a reasonable amount of time, but could that last? Does the octopus understand what comes down the wire?

Imagine that the islander now says she has built a coconut catapult and asks the octopus to build one too and tell her what it thinks. The octopus cannot do this. Without knowing what the words in the messages refer to in the world, it cannot follow the islander’s instructions. Perhaps it guesses a reply: “Okay, cool idea!” The islander will probably take this to mean that the person she is speaking to understands her message. But if so, she is seeing meaning where there is none. Finally, imagine that the islander gets attacked by a bear and sends calls for help down the line. What is the octopus to do with these words?

Bender and Koller believe that this is how large language models learn and why they are limited. “The thought experiment shows why this path is not going to lead us to a machine that understands anything,” says Bender. “The deal with the octopus is that we have given it its training data, the conversations between those two people, and that’s it. But then here’s something that comes out of the blue and it won’t be able to deal with it because it hasn’t understood.”

The other paper Bender is known for, “On the Dangers of Stochastic Parrots,” highlights a series of harms that she and her coauthors believe the companies making large language models are ignoring. These include the huge computational costs of making the models and their environmental impact; the racist, sexist, and other abusive language the models entrench; and the dangers of building a system that could fool people by “haphazardly stitching together sequences of linguistic forms … according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.”

Google senior management wasn’t happy with the paper, and the resulting conflict led two of Bender’s coauthors, Timnit Gebru and Margaret Mitchell, to be forced out of the company, where they had led the AI Ethics team. It also made “stochastic parrot” a popular put-down for large language models—and landed Bender right in the middle of the name-calling merry-go-round.

The bottom line for Bender and for many like-minded researchers is that the field has been taken in by smoke and mirrors: “I think that they are led to imagine autonomous thinking entities that can make decisions for themselves and ultimately be the kind of thing that could actually be accountable for those decisions.”

Always the linguist, Bender is now at the point where she won’t even use the term AI “without scare quotes,” she tells me. Ultimately, for her, it’s a Big Tech buzzword that distracts from the many associated harms. “I’ve got skin in the game now,” she says. “I care about these issues, and the hype is getting in the way.”

Extraordinary evidence?

Agüera y Arcas calls people like Bender “AI denialists”—the implication being that they won’t ever accept what he takes for granted. Bender’s position is that extraordinary claims require extraordinary evidence, which we do not have.

But there are people looking for it, and until they find something clear-cut—sparks or stochastic parrots or something in between—they’d prefer to sit out the fight. Call this the wait-and-see camp.

As Ellie Pavlick, who studies neural networks at Brown University, tells me: “It’s offensive to some people to suggest that human intelligence could be re-created through these kinds of mechanisms.”

She adds, “People have strong-held beliefs about this issue—it almost feels religious. On the other hand, there’s people who have a little bit of a God complex. So it’s also offensive to them to suggest that they just can’t do it.”

Pavlick is ultimately agnostic. She’s a scientist, she insists, and will follow wherever the science leads. She rolls her eyes at the wilder claims, but she believes there’s something exciting going on. “That’s where I would disagree with Bender and Koller,” she tells me. “I think there’s actually some sparks—maybe not of AGI, but like, there’s some things in there that we didn’t expect to find.”

The problem is finding agreement on what those exciting things are and why they’re exciting. With so much hype, it’s easy to be cynical.

Researchers like Bubeck seem a lot more cool-headed when you hear them out. He thinks the infighting misses the nuance in his work. “I don’t see any problem in holding simultaneous views,” he says. “There is stochastic parroting; there is reasoning—it’s a spectrum. It’s very complex. We don’t have all the answers.”

“We need a completely new vocabulary to describe what’s going on,” he says. “One reason why people push back when I talk about reasoning in large language models is because it’s not the same reasoning as in human beings. But I think there is no way we can not call it reasoning. It is reasoning.”

Anthropic’s Olah plays it safe when pushed on what we’re seeing in LLMs, though his company, one of the hottest AI labs in the world right now, built Claude 3, an LLM that has received just as much hyperbolic praise as GPT-4 (if not more) since its release earlier this year.

“I feel like a lot of these conversations about the capabilities of these models are very tribal,” he says. “People have preexisting opinions, and it’s not very informed by evidence on any side. Then it just becomes kind of vibes-based, and I think vibes-based arguments on the internet tend to go in a bad direction.”

Olah tells me he has hunches of his own. “My subjective impression is that these things are tracking pretty sophisticated ideas,” he says. “We don’t have a comprehensive story of how very large models work, but I think it’s hard to reconcile what we’re seeing with the extreme ‘stochastic parrots’ picture.”

That’s as far as he’ll go: “I don’t want to go too much beyond what can be really strongly inferred from the evidence that we have.”

Last month, Anthropic released results from a study in which researchers gave Claude 3 the neural network equivalent of an MRI. By monitoring which bits of the model turned on and off as they ran it, they identified specific patterns of neurons that activated when the model was shown specific inputs.

Anthropic also reported patterns that it says correlate with inputs that attempt to describe or show abstract concepts. “We see features related to deception and honesty, to sycophancy, to security vulnerabilities, to bias,” says Olah. “We find features related to power seeking and manipulation and betrayal.”

These results give one of the clearest looks yet at what’s inside a large language model. It’s a tantalizing glimpse at what look like elusive humanlike traits. But what does it really tell us? As Olah admits, they do not know what the model does with these patterns. “It’s a relatively limited picture, and the analysis is pretty hard,” he says.

Even if Olah won’t spell out exactly what he thinks goes on inside a large language model like Claude 3, it’s clear why the question matters to him. Anthropic is known for its work on AI safety—making sure that powerful future models will behave in ways we want them to and not in ways we don’t (known as “alignment” in industry jargon). Figuring out how today’s models work is not only a necessary first step if you want to control future ones; it also tells you how much you need to worry about doomer scenarios in the first place. “If you don’t think that models are going to be very capable,” says Olah, “then they’re probably not going to be very dangerous.”

Why we all can’t get along

In a 2014 interview with the BBC that looked back on her career, the influential cognitive scientist Margaret Boden, now 87, was asked if she thought there were any limits that would prevent computers (or “tin cans,” as she called them) from doing what humans can do.

“I certainly don’t think there’s anything in principle,” she said. “Because to deny that is to say that [human thinking] happens by magic, and I don’t believe that it happens by magic.”

But, she cautioned, powerful computers won’t be enough to get us there: the AI field will also need “powerful ideas”—new theories of how thinking happens, new algorithms that might reproduce it. “But these things are very, very difficult and I see no reason to assume that we will one of these days be able to answer all of those questions. Maybe we will; maybe we won’t.”

Boden was reflecting on the early days of the current boom, but this will-we-or-won’t-we teetering speaks to decades in which she and her peers grappled with the same hard questions that researchers struggle with today. AI began as an ambitious aspiration 70-odd years ago and we are still disagreeing about what is and isn’t achievable, and how we’ll even know if we have achieved it. Most—if not all—of these disputes come down to this: We don’t have a good grasp on what intelligence is or how to recognize it. The field is full of hunches, but no one can say for sure.

We’ve been stuck on this point ever since people started taking the idea of AI seriously. Or even before that, when the stories we consumed started planting the idea of humanlike machines deep in our collective imagination. The long history of these disputes means that today’s fights often reinforce rifts that have been around since the beginning, making it even more difficult for people to find common ground.

To understand how we got here, we need to understand where we’ve been. So let’s dive into AI’s origin story—one that also played up the hype in a bid for cash.

A brief history of AI spin

The computer scientist John McCarthy is credited with coming up with the term “artificial intelligence” in 1955 when writing a funding application for a summer research program at Dartmouth College in New Hampshire.

The plan was for McCarthy and a small group of fellow researchers, a who’s-who of postwar US mathematicians and computer scientists—or “John McCarthy and the boys,” as Harry Law, a researcher who studies the history of AI at the University of Cambridge and works on ethics and policy at Google DeepMind, puts it—to get together for two months (not a typo) and make some serious headway on this new research challenge they’d set themselves.

“The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it,” McCarthy and his coauthors wrote. “An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.”

That list of things they wanted to make machines do—what Bender calls “the starry-eyed dream”—hasn’t changed much. Using language, forming concepts, and solving problems are defining goals for AI today. The hubris hasn’t changed much either: “We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer,” they wrote. That summer, of course, has stretched to seven decades. And the extent to which these problems are in fact now solved is something that people still shout about on the internet.

But what’s often left out of this canonical history is that artificial intelligence almost wasn’t called “artificial intelligence” at all.

More than one of McCarthy’s colleagues hated the term he had come up with. “The word ‘artificial’ makes you think there’s something kind of phony about this,” Arthur Samuel, a Dartmouth participant and creator of the first checkers-playing computer, is quoted as saying in historian Pamela McCorduck’s 2004 book Machines Who Think. The mathematician Claude Shannon, a coauthor of the Dartmouth proposal who is sometimes billed as “the father of the information age,” preferred the term “automata studies.” Herbert Simon and Allen Newell, two other AI pioneers, continued to call their own work “complex information processing” for years afterwards.

In fact, “artificial intelligence” was just one of several labels that might have captured the hodgepodge of ideas that the Dartmouth group was drawing on. The historian Jonnie Penn has identified possible alternatives that were in play at the time, including “engineering psychology,” “applied epistemology,” “neural cybernetics,” “non-numerical computing,” “neuraldynamics,” “advanced automatic programming,” and “hypothetical automata.” This list of names reveals how diverse the inspiration for their new field was, pulling from biology, neuroscience, statistics, and more. Marvin Minsky, another Dartmouth participant, has described AI as a “suitcase word” because it can hold so many divergent interpretations.

But McCarthy wanted a name that captured the ambitious scope of his vision. Calling this new field “artificial intelligence” grabbed people’s attention—and money. Don’t forget: AI is sexy, AI is cool.

In addition to terminology, the Dartmouth proposal codified a split between rival approaches to artificial intelligence that has divided the field ever since—a divide Law calls the “core tension in AI.”

McCarthy and his colleagues wanted to describe in computer code “every aspect of learning or any other feature of intelligence” so that machines could mimic them. In other words, if they could just figure out how thinking worked—the rules of reasoning—and write down the recipe, they could program computers to follow it. This laid the foundation of what came to be known as rule-based or symbolic AI (sometimes referred to now as GOFAI, “good old-fashioned AI”). But coming up with hard-coded rules that captured the processes of problem-solving for actual, nontrivial problems proved too hard.

The other path favored neural networks, computer programs that would try to learn those rules by themselves in the form of statistical patterns. The Dartmouth proposal mentions it almost as an aside (referring variously to “neuron nets” and “nerve nets”). Though the idea seemed less promising at first, some researchers nevertheless continued to work on versions of neural networks alongside symbolic AI. But it would take decades—plus vast amounts of computing power and much of the data on the internet—before they really took off. Fast-forward to today and this approach underpins the entire AI boom.

The big takeaway here is that, just like today’s researchers, AI’s innovators fought about foundational concepts and got caught up in their own promotional spin. Even team GOFAI was plagued by squabbles. Aaron Sloman, a philosopher and fellow AI pioneer now in his late 80s, recalls how “old friends” Minsky and McCarthy “disagreed strongly” when he got to know them in the ’70s: “Minsky thought McCarthy’s claims about logic could not work, and McCarthy thought Minsky’s mechanisms could not do what could be done using logic. I got on well with both of them, but I was saying, ‘Neither of you have got it right.’” (Sloman still thinks no one can account for the way human reasoning uses intuition as much as logic, but that’s yet another tangent!)

As the fortunes of the technology waxed and waned, the term “AI” went in and out of fashion. In the early ’70s, both research tracks were effectively put on ice after the UK government published a report arguing that the AI dream had gone nowhere and wasn’t worth funding. All that hype had led to nothing. Research projects were shuttered, and computer scientists scrubbed the words “artificial intelligence” from their grant proposals.

When I was finishing a computer science PhD in 2008, only one person in the department was working on neural networks. Bender has a similar recollection: “When I was in college, a running joke was that AI is anything that we haven’t figured out how to do with computers yet. Like, as soon as you figure out how to do it, it wasn’t magic anymore, so it wasn’t AI.”

But that magic—the grand vision laid out in the Dartmouth proposal—remained alive and, as we can now see, laid the foundations for the AGI dream.

Good and bad behavior

In 1950, five years before McCarthy started talking about artificial intelligence, Alan Turing had published a paper that asked: Can machines think? To address that question, the famous mathematician proposed a hypothetical test, which he called the imitation game. The setup imagines a human and a computer behind a screen and a second human who types questions to each. If the questioner cannot tell which answers come from the human and which come from the computer, Turing claimed, the computer may as well be said to think.

What Turing saw—unlike McCarthy’s crew—was that thinking is a really difficult thing to describe. The Turing test was a way to sidestep that problem. “He basically said: Instead of focusing on the nature of intelligence itself, I’m going to look for its manifestation in the world. I’m going to look for its shadow,” says Law.

In 1952, BBC Radio convened a panel to explore Turing’s ideas further. Turing was joined in the studio by two of his Manchester University colleagues—professor of mathematics Maxwell Newman and professor of neurosurgery Geoffrey Jefferson—and Richard Braithwaite, a philosopher of science, ethics, and religion at the University of Cambridge.

Braithwaite kicked things off: “Thinking is ordinarily regarded as so much the specialty of man, and perhaps of other higher animals, the question may seem too absurd to be discussed. But of course, it all depends on what is to be included in ‘thinking.’”

The panelists circled Turing’s question but never quite pinned it down.

When they tried to define what thinking involved, what its mechanisms were, the goalposts moved. “As soon as one can see the cause and effect working themselves out in the brain, one regards it as not being thinking but a sort of unimaginative donkey work,” said Turing.

Here was the problem: When one panelist proposed some behavior that might be taken as evidence of thought—reacting to a new idea with outrage, say—another would point out that a computer could be made to do it.

As Newman said, it would be easy enough to program a computer to print “I don’t like this new program.” But he admitted that this would be a trick.

Exactly, Jefferson said: He wanted a computer that would print “I don’t like this new program” because it didn’t like the new program. In other words, for Jefferson, behavior was not enough. It was the process leading to the behavior that mattered.

But Turing disagreed. As he had noted, uncovering a specific process—the donkey work, to use his phrase—did not pinpoint what thinking was either. So what was left?

“From this point of view, one might be tempted to define thinking as consisting of those mental processes that we don’t understand,” said Turing. “If this is right, then to make a thinking machine is to make one which does interesting things without our really understanding quite how it is done.”

It is strange to hear people grapple with these ideas for the first time. “The debate is prescient,” says Tomer Ullman, a cognitive scientist at Harvard University. “Some of the points are still alive—perhaps even more so. What they seem to be going round and round on is that the Turing test is first and foremost a behaviorist test.”

For Turing, intelligence was hard to define but easy to recognize. He proposed that the appearance of intelligence was enough—and said nothing about how that behavior should come about.

And yet most people, when pushed, will have a gut instinct about what is and isn’t intelligent. There are dumb ways and clever ways to come across as intelligent. In 1981, Ned Block, a philosopher at New York University, showed that Turing’s proposal fell short of those gut instincts. Because it said nothing of what caused the behavior, the Turing test can be beaten through trickery (as Newman had noted in the BBC broadcast).

“Could the issue of whether a machine in fact thinks or is intelligent depend on how gullible human interrogators tend to be?” asked Block. (Or as computer scientist Mark Riedl has remarked: “The Turing test is not for AI to pass but for humans to fail.”)

Imagine, Block said, a vast look-up table in which human programmers had entered all possible answers to all possible questions. Type a question into this machine, and it would look up a matching answer in its database and send it back. Block argued that anyone using this machine would judge its behavior to be intelligent: “But actually, the machine has the intelligence of a toaster,” he wrote. “All the intelligence it exhibits is that of its programmers.”
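To make Block’s point concrete, here is a minimal Python sketch of a lookup-table responder. It is an illustration under simplifying assumptions, not Block’s own formulation: the canned questions and answers are hypothetical, the table is tiny rather than exhaustive, and it keys on single questions rather than on whole conversation histories. Every reply it gives was written in advance by whoever filled in the table.

```python
# A toy "Blockhead": every reply is typed in ahead of time by a programmer.
# The entries below are hypothetical, chosen only for illustration.
CANNED_REPLIES = {
    "can machines think?": "That depends on what you count as thinking.",
    "what is the capital of france?": "Paris.",
    "do you like this new program?": "I don't like this new program.",
}


def normalize(question: str) -> str:
    """Lowercase and collapse whitespace so trivially different typings match."""
    return " ".join(question.lower().split())


def blockhead_reply(question: str) -> str:
    """Return the stored answer for a question; no reasoning happens here."""
    # A pure dictionary lookup: whatever "intelligence" the reply shows
    # was put there by whoever filled in CANNED_REPLIES.
    return CANNED_REPLIES.get(normalize(question), "I have no answer for that.")


if __name__ == "__main__":
    print(blockhead_reply("Can machines think?"))
    print(blockhead_reply("What is the capital of Poland?"))
```

The fallback reply marks where a finite table runs out. Block’s imagined machine never reaches it, because by stipulation its table holds an answer to everything, which is exactly why it is a thought experiment rather than something anyone could build.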

Block concluded that whether behavior is intelligent behavior is a matter of how it is produced, not how it appears. Block’s toasters, which became known as Blockheads, are one of the strongest counterexamples to the assumptions behind Turing’s proposal.

Looking under the hood

The Turing test is not meant to be a practical metric, but its implications are deeply ingrained in the way we think about artificial intelligence today. This has become particularly relevant as LLMs have exploded in the past several years. These models get ranked by their outward behaviors, specifically how well they do on a range of tests. When OpenAI announced GPT-4, it published an impressive-looking scorecard that detailed the model’s performance on multiple high school and professional exams. Almost nobody talks about how these models get those results.

That’s because we don’t know. Today’s large language models are too complex for anybody to say exactly how their behavior is produced. Researchers outside the small handful of companies making those models don’t know what’s in their training data; none of the model makers have shared details. That makes it hard to say what is and isn’t a kind of memorization—a stochastic parroting. But even researchers on the inside, like Olah, don’t know what’s really going on when faced with a bridge-obsessed bot.

This leaves the question wide open: Yes, large language models are built on math—but are they doing something intelligent with it?

And the arguments begin again.

“Most people are trying to armchair through it,” says Brown University’s Pavlick, meaning that they are arguing about theories without looking at what’s really happening. “Some people are like, ‘I think it’s this way,’ and some people are like, ‘Well, I don’t.’ We’re kind of stuck and everyone’s unsatisfied.”

Bender thinks that this sense of mystery plays into the mythmaking. (“Magicians do not explain their tricks,” she says.) Without a proper appreciation of where the LLM’s words come from, we fall back on familiar assumptions about humans, since that is our only real point of reference. When we talk to another person, we try to make sense of what that person is trying to tell us. “That process necessarily entails imagining a life behind the words,” says Bender. That’s how language works.

“The parlor trick of ChatGPT is so impressive that when we see these words coming out of it, we do the same thing instinctively,” she says. “It’s very good at mimicking the form of language. The problem is that we are not at all good at encountering the form of language and not imagining the rest of it.”

For some researchers, it doesn’t really matter if we can’t understand the how. Bubeck used to study large language models to try to figure out how they worked, but GPT-4 changed the way he thought about them. “It seems like these questions are not so relevant anymore,” he says. “The model is so big, so complex, that we can’t hope to open it up and understand what’s really happening.”

But Pavlick, like Olah, is trying to do just that. Her team has found that models seem to encode abstract relationships between objects, such as that between a country and its capital. Studying one large language model, Pavlick and her colleagues found that it used the same encoding to map France to Paris and Poland to Warsaw. That almost sounds smart, I tell her. “No, it’s literally a lookup table,” she says.

But what struck Pavlick was that, unlike a Blockhead, the model had learned this lookup table on its own. In other words, the LLM figured out by itself that Paris is to France as Warsaw is to Poland. But what does this show? Is encoding its own lookup table instead of using a hard-coded one a sign of intelligence? Where do you draw the line?

“Basically, the problem is that behavior is the only thing we know how to measure reliably,” says Pavlick. “Anything else requires a theoretical commitment, and people don’t like having to make a theoretical commitment because it’s so loaded.”

Not all people. A lot of influential scientists are just fine with theoretical commitment. Hinton, for example, insists that neural networks are all you need to re-create humanlike intelligence. “Deep learning is going to be able to do everything,” he told MIT Technology Review in 2020.

It’s a commitment that Hinton seems to have held onto from the start. Sloman, who recalls the two of them arguing when Hinton was a graduate student in his lab, remembers being unable to persuade him that neural networks cannot learn certain crucial abstract concepts that humans and some other animals seem to have an intuitive grasp of, such as whether something is impossible. We can just see when something’s ruled out, Sloman says. “Despite Hinton’s outstanding intelligence, he never seemed to understand that point. I don’t know why, but there are large numbers of researchers in neural networks who share that failing.”

And then there’s Marcus, whose view of neural networks is the exact opposite of Hinton’s. His case draws on what he says scientists have discovered about brains.

Brains, Marcus points out, are not blank slates that learn fully from scratch—they come ready-made with innate structures and processes that guide learning. It’s how babies can learn things that the best neural networks still can’t, he argues.

“Neural network people have this hammer, and now everything is a nail,” says Marcus. “They want to do all of it with learning, which many cognitive scientists would find unrealistic and silly. You’re not going to learn everything from scratch.”

Not that Marcus—a cognitive scientist—is any less sure of himself. “If one really looked at who’s predicted the current situation well, I think I would have to be at the top of anybody’s list,” he tells me from the back of an Uber on his way to catch a flight to a speaking gig in Europe. “I know that doesn’t sound very modest, but I do have this perspective that turns out to be very important if what you’re trying to study is artificial intelligence.”

Given his well-publicized attacks on the field, it might surprise you that Marcus still believes AGI is on the horizon. It’s just that he thinks today’s fixation on neural networks is a mistake. “We probably need a breakthrough or two or four,” he says. “You and I might not live that long, I’m sorry to say. But I think it’ll happen this century. Maybe we’ve got a shot at it.”

The power of a technicolor dream

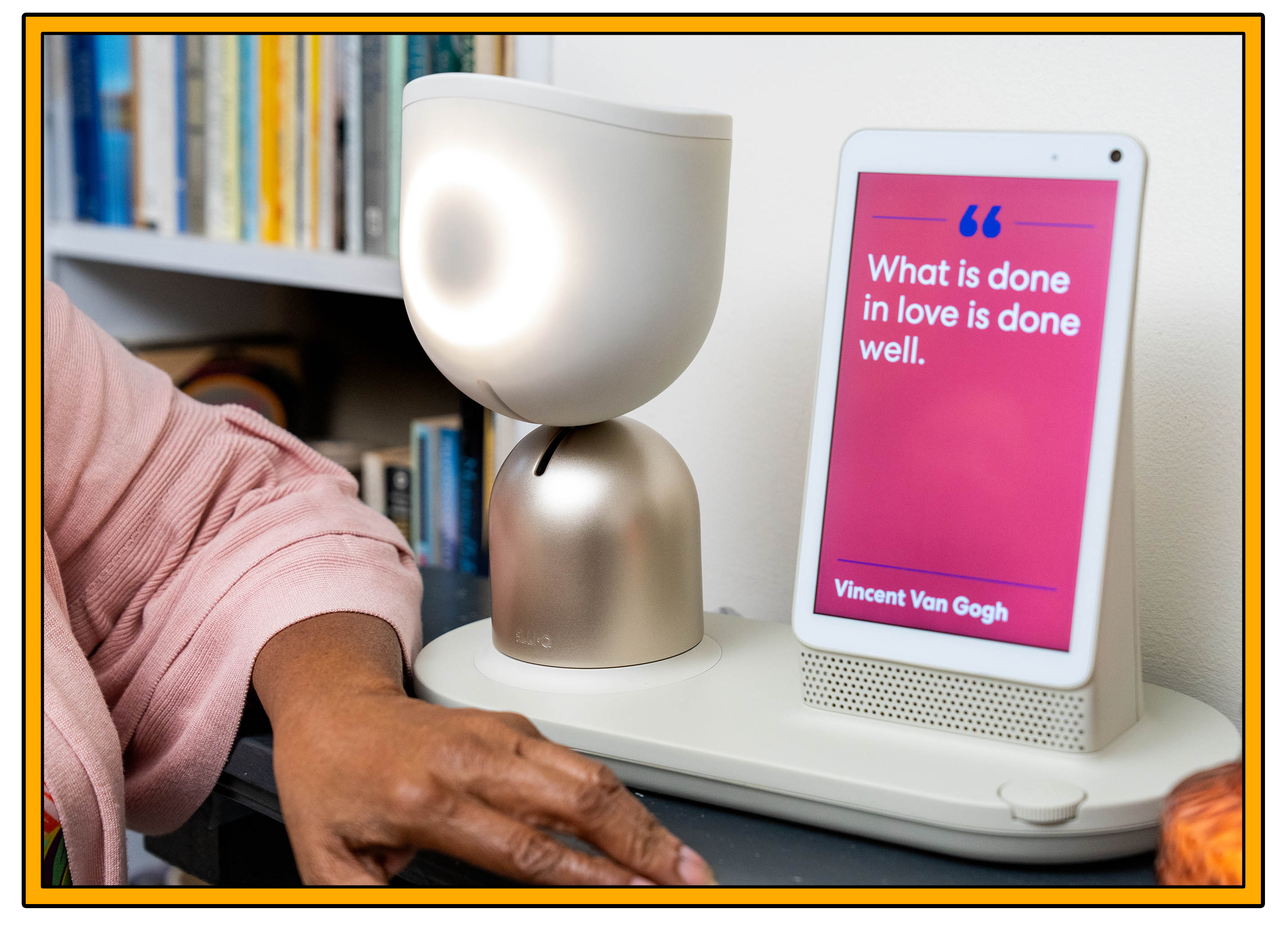

Over Dor Skuler’s shoulder on the Zoom call from his home in Ramat Gan, Israel, a little lamp-like robot is winking on and off while we talk about it. “You can see ElliQ behind me here,” he says. Skuler’s company, Intuition Robotics, develops these devices for older people, and the design—part Amazon Alexa, part R2-D2—must make it very clear that ElliQ is a computer. If any of his customers show signs of being confused about that, Intuition Robotics takes the device back, says Skuler.

ElliQ has no face, no humanlike shape at all. Ask it about sports, and it will crack a joke about having no hand-eye coordination because it has no hands and no eyes. “For the life of me, I don’t understand why the industry is trying to fulfill the Turing test,” Skuler says. “Why is it in the best interest of humanity for us to develop technology whose goal is to dupe us?”

Instead, Skuler’s firm is betting that people can form relationships with machines that present as machines. “Just like we have the ability to build a real relationship with a dog,” he says. “Dogs provide a lot of joy for people. They provide companionship. People love their dog—but they never confuse it to be a human.”

ElliQ’s users, many in their 80s and 90s, refer to the robot as an entity or a presence—sometimes a roommate. “They’re able to create a space for this in-between relationship, something between a device or a computer and something that’s alive,” says Skuler.

But no matter how hard ElliQ’s designers try to control the way people view the device, they are competing with decades of pop culture that have shaped our expectations. Why are we so fixated on AI that’s humanlike? “Because it’s hard for us to imagine something else,” says Skuler (who indeed refers to ElliQ as “she” throughout our conversation). “And because so many people in the tech industry are fans of science fiction. They try to make their dream come true.”

How many of today’s developers grew up thinking that building a smart machine was seriously the coolest thing—if not the most important thing—that they could possibly do?

It was not long ago that OpenAI launched its new voice-controlled version of ChatGPT with a voice that sounded like Scarlett Johansson, after which many people—including Altman—flagged the connection to Spike Jonze’s 2013 movie Her.

Science fiction co-invents what AI is understood to be. As Cave and Dihal write in Imagining AI: “AI was a cultural phenomenon long before it was a technological one.”

Stories and myths about remaking humans as machines have been around for centuries. People have been dreaming of artificial humans for probably as long as they have dreamed of flight, says Dihal. She notes that Daedalus, the figure in Greek mythology famous for building a pair of wings for himself and his son, Icarus, also built what was effectively a giant bronze robot called Talos that threw rocks at passing pirates.

The word robot, which entered the language through the Czech playwright Karel Čapek’s 1920 play Rossum’s Universal Robots, comes from robota, a Czech term for “forced labor.” The “laws of robotics” outlined in Isaac Asimov’s science fiction, forbidding machines from harming humans, are inverted by movies like The Terminator, which is an iconic reference point for popular fears about real-world technology. The 2014 film Ex Machina is a dramatic riff on the Turing test. Last year’s blockbuster The Creator imagines a future world in which AI has been outlawed because it set off a nuclear bomb, an event that some doomers consider at least an outside possibility.

Cave and Dihal relate how another movie, 2014’s Transcendence, in which an AI expert played by Johnny Depp gets his mind uploaded to a computer, served a narrative pushed by ur-doomers Stephen Hawking, fellow physicist Max Tegmark, and AI researcher Stuart Russell. In an article published in the Huffington Post on the movie’s opening weekend, the trio wrote: “As the Hollywood blockbuster Transcendence debuts this weekend with … clashing visions for the future of humanity, it’s tempting to dismiss the notion of highly intelligent machines as mere science fiction. But this would be a mistake, and potentially our worst mistake ever.”

Right around the same time, Tegmark founded the Future of Life Institute, with a remit to study and promote AI safety. Depp’s costar in the movie, Morgan Freeman, was on the institute’s board, and Elon Musk, who had a cameo in the film, donated $10 million in its first year. For Cave and Dihal, Transcendence is a perfect example of the multiple entanglements between popular culture, academic research, industrial production, and “the billionaire-funded fight to shape the future.”

On the London leg of his world tour last year, Altman was asked what he’d meant when he tweeted: “AI is the tech the world has always wanted.” Standing at the back of the room that day, behind an audience of hundreds, I listened to him offer his own kind of origin story: “I was, like, a very nervous kid. I read a lot of sci-fi. I spent a lot of Friday nights home, playing on the computer. But I was always really interested in AI and I thought it’d be very cool.” He went to college, got rich, and watched as neural networks became better and better. “This can be tremendously good but also could be really bad. What are we going to do about that?” he recalled thinking in 2015. “I ended up starting OpenAI.”

Why you should care that a bunch of nerds are fighting about AI

Okay, you get it: No one can agree on what AI is. But what everyone does seem to agree on is that the current debate around AI has moved far beyond the academic and the scientific. There are political and moral components in play—which doesn’t help with everyone thinking everyone else is wrong.

Untangling this is hard. It can be difficult to see what’s going on when some of those moral views take in the entire future of humanity and anchor it in a technology that nobody can quite define.

But we can’t just throw our hands up and walk away. Because no matter what this technology is, it’s coming, and unless you live under a rock, you’ll use it in one form or another. And the form that technology takes—and the problems it both solves and creates—will be shaped by the thinking and the motivations of people like the ones you just read about. In particular, by the people with the most power, the most cash, and the biggest megaphones.

Which leads me to the TESCREALists. Wait, come back! I realize it’s unfair to introduce yet another new concept so late in the game. But to understand how the people in power may mold the technologies they build, and how they explain them to the world’s regulators and lawmakers, you need to really understand their mindset.

Gebru, who founded the Distributed AI Research Institute after leaving Google, and Émile Torres, a philosopher and historian at Case Western Reserve University, have traced the influence of several techno-utopian belief systems on Silicon Valley. The pair argue that to understand what’s going on with AI right now—both why companies such as Google DeepMind and OpenAI are in a race to build AGI and why doomers like Tegmark and Hinton warn of a coming catastrophe—the field must be seen through the lens of what Torres has dubbed the TESCREAL framework.

The clunky acronym (pronounced tes-cree-all) replaces an even clunkier list of labels: transhumanism, extropianism, singularitarianism, cosmism, rationalism, effective altruism, and longtermism. A lot has been written (and will be written) about each of these worldviews, so I’ll spare you here. (There are rabbit holes within rabbit holes for anyone wanting to dive deeper. Pick your forum and pack your spelunking gear.)

This constellation of overlapping ideologies is attractive to a certain kind of galaxy-brain mindset common in the Western tech world. Some anticipate human immortality; others predict humanity’s colonization of the stars. The common tenet is that an all-powerful technology—AGI or superintelligence, choose your team—is not only within reach but inevitable. You can see this in the do-or-die attitude that’s ubiquitous inside cutting-edge labs like OpenAI: If we don’t make AGI, someone else will.

What’s more, TESCREALists believe that AGI could not only fix the world’s problems but level up humanity. “The development and proliferation of AI—far from a risk that we should fear—is a moral obligation that we have to ourselves, to our children and to our future,” Andreessen wrote in a much-dissected manifesto last year. I have been told many times over that AGI is the way to make the world a better place—by Demis Hassabis, CEO and cofounder of Google DeepMind; by Mustafa Suleyman, CEO of the newly minted Microsoft AI and another cofounder of DeepMind; by Sutskever, Altman, and more.

But as Andreessen noted, it’s a yin-yang mindset. The flip side of techno-utopia is techno-hell. If you believe that you are building a technology so powerful that it will solve all the world’s problems, you probably also believe there’s a non-zero chance it will all go very wrong. When asked at the World Government Summit in February what keeps him up at night, Altman replied: “It’s all the sci-fi stuff.”

It’s a tension that Hinton has been talking up for the last year. It’s what companies like Anthropic claim to address. It’s what Sutskever is focusing on in his new lab, and what he wanted a special in-house team at OpenAI to focus on last year before disagreements over the way the company balanced risk and reward led most members of that team to leave.

Sure, doomerism is part of the spin. (“Claiming that you have created something that is super-intelligent is good for sales figures,” says Dihal. “It’s like, ‘Please, someone stop me from being so good and so powerful.’”) But boom or doom, exactly what (and whose) problems are these guys supposedly solving? Are we really expected to trust what they build and what they tell our leaders?

Gebru and Torres (and others) are adamant: No, we should not. They are highly critical of these ideologies and how they may influence the development of future technology, especially AI. Fundamentally, they link several of these worldviews—with their common focus on “improving” humanity—to the racist eugenics movements of the 20th century.

One danger, they argue, is that a shift of resources toward the kind of technological innovations that these ideologies demand, from building AGI to extending life spans to colonizing other planets, will ultimately benefit people who are Western and white at the cost of billions of people who aren’t. If your sights are set on fantastical futures, it’s easy to overlook the present-day costs of innovation, such as labor exploitation, the entrenchment of racist and sexist bias, and environmental damage.

“Are we trying to build a tool that’s useful to us in some way?” asks Bender, reflecting on the casualties of this race to AGI. If so, who’s it for, how do we test it, how well does it work? “But if what we’re building it for is just so that we can say that we’ve done it, that’s not a goal that I can get behind. That’s not a goal that’s worth billions of dollars.”

Bender says that seeing the connections between the TESCREAL ideologies is what made her realize there was something more to these debates. “Tangling with those people was—” she stops. “Okay, there’s more here than just academic ideas. There’s a moral code tied up in it as well.”

Of course, laid out like this without nuance, it doesn’t sound as if we—as a society, as individuals—are getting the best deal. It also all sounds rather silly. When Gebru described parts of the TESCREAL bundle in a talk last year, her audience laughed. It’s also true that few people would identify themselves as card-carrying students of these schools of thought, at least in their extremes.

But if we don’t understand how those building this tech approach it, how can we decide what deals we want to make? What apps we decide to use, what chatbots we want to give personal information to, what data centers we support in our neighborhoods, what politicians we want to vote for?

It used to be like this: There was a problem in the world, and we built something to fix it. Here, everything is backward: The goal seems to be to build a machine that can do everything, and to skip the slow, hard work that goes into figuring out what the problem is before building the solution.

And as Gebru said in that same talk, “A machine that solves all problems: if that’s not magic, what is it?”

Semantics, semantics … semantics?

When asked outright what AI is, a lot of people dodge the question. Not Suleyman. In April, the CEO of Microsoft AI stood on the TED stage and told the audience what he’d told his six-year-old nephew in response to that question. The best answer he could give, Suleyman explained, was that AI was “a new kind of digital species”—a technology so universal, so powerful, that calling it a tool no longer captured what it could do for us.

“On our current trajectory, we are heading toward the emergence of something we are all struggling to describe, and yet we cannot control what we don’t understand,” he said. “And so the metaphors, the mental models, the names—these all matter if we are to get the most out of AI whilst limiting its potential downsides.”

Language matters! I hope that’s clear from the twists and turns and tantrums we’ve been through to get to this point. But I also hope you’re asking: Whose language? And whose downsides? Suleyman is an industry leader at a technology giant that stands to make billions from its AI products. Describing the technology behind those products as a new kind of species conjures something wholly unprecedented, something with agency and capabilities that we have never seen before. That makes my spidey sense tingle. You?

I can’t tell you if there’s magic here (ironically or not). And I can’t tell you how math can realize what Bubeck and many others see in this technology (no one can yet). You’ll have to make up your own mind. But I can pull back the curtain on my own point of view.

Writing about GPT-3 back in 2020, I said that the greatest trick AI ever pulled was convincing the world it exists. I still think that: We are hardwired to see intelligence in things that behave in certain ways, whether it’s there or not. In the last few years, the tech industry has found reasons of its own to convince us that AI exists, too. This makes me skeptical of many of the claims made for this technology.

With large language models—via their smiley-face masks—we are confronted by something we’ve never had to think about before. “It’s taking this hypothetical thing and making it really concrete,” says Pavlick. “I’ve never had to think about whether a piece of language required intelligence to generate because I’ve just never dealt with language that didn’t.”

AI is many things. But I don’t think it’s humanlike. I don’t think it’s the solution to all (or even most) of our problems. It isn’t ChatGPT or Gemini or Copilot. It isn’t neural networks. It’s an idea, a vision, a kind of wish fulfillment. And ideas get shaped by other ideas, by morals, by quasi-religious convictions, by worldviews, by politics, and by gut instinct. “Artificial intelligence” is a helpful shorthand to describe a raft of different technologies. But AI is not one thing; it never has been, no matter how often the branding gets seared into the outside of the box.

“The truth is these words”—intelligence, reasoning, understanding, and more—“were defined before there was a need to be really precise about it,” says Pavlick. “I don’t really like when the question becomes ‘Does the model understand—yes or no?’ because, well, I don’t know. Words get redefined and concepts evolve all the time.”

I think that’s right. And the sooner we can all take a step back, agree on what we don’t know, and accept that none of this is yet a done deal, the sooner we can—I don’t know, I guess not all hold hands and sing kumbaya. But we can stop calling each other names.