The NYPD used a controversial facial recognition tool. Here’s what you need to know.

It’s been a busy week for Clearview AI, the controversial facial recognition company that uses 3 billion photos scraped from the web to power a search engine for faces. On April 6, BuzzFeed News published a database of over 1,800 entities (including state and local police and other taxpayer-funded agencies such as health-care systems and public schools) that it says have used the company’s controversial products. Many of those agencies replied to the accusations by saying they had only trialed the technology and had no formal contract with the company.

But the day before, the definition of a “trial” with Clearview was detailed when nonprofit news site Muckrock released emails between the New York Police Department and the company. The documents, obtained through freedom of information requests by the Legal Aid Society and journalist Rachel Richards, track a friendly two-year relationship between the department and the tech company during which time NYPD tested the technology many times, and used facial recognition in live investigations.

The NYPD has previously downplayed its relationship with Clearview AI and its use of the company’s technology. But the emails show that the relationship between them was well developed, with a large number of police officers conducting a high volume of searches with the app and using them in real investigations. The NYPD has run over 5,100 searches with Clearview AI.

This is particularly problematic because stated policies limit the NYPD from creating an unsupervised repository of photos that facial recognition systems can reference, and restrict the use of facial recognition technology to a specific team. Both policies seem to have been circumvented with Clearview AI. The emails reveal that the NYPD gave many officers outside the facial recognition team access to the system, which relies on a huge library of public photos from social media. The emails also show how NYPD officers downloaded the app onto their personal devices, in contravention of stated policy, and used the powerful and biased technology in a casual fashion.

Clearview AI runs a powerful neural network that processes photographs of faces and compares their precise measurement and symmetry with a massive database of pictures to suggest possible matches. It’s unclear just how accurate the technology is, but it’s widely used by police departments and other government agencies. Clearview AI has been heavily criticized for its use of personally identifiable information, its decision to violate people’s privacy by scraping photographs from the internet without their permission, and its choice of clientele.

The emails span a period from October 2018 through February 2020, beginning when Clearview AI CEO Hoan Ton-That was introduced to NYPD deputy inspector Chris Flanagan. After initial meetings, Clearview AI entered into a vendor contract with NYPD in December 2018 on a trial basis that lasted until the following March.

The documents show that many individuals at NYPD had access to Clearview during and after this time, from department leadership to junior officers. Throughout the exchanges, Clearview AI encouraged more use of its services. (“See if you can reach 100 searches,” its onboarding instructions urged officers.) The emails show that trial accounts for the NYPD were created as late as February 2020, almost a year after the trial period was said to have ended.

We reviewed the emails, and talked to top surveillance and legal experts about their contents. Here’s what you need to know.

NYPD lied about the extent of its relationship with Clearview AI and the use of its facial recognition technology

The NYPD told BuzzFeed News and the New York Post previously that it had “no institutional relationship” with Clearview AI, “formally or informally.” The department did disclose that it had trialed Clearview AI, but the emails show that the technology was used over a sustained time period by a large number of people who completed a high volume of searches in real investigations.

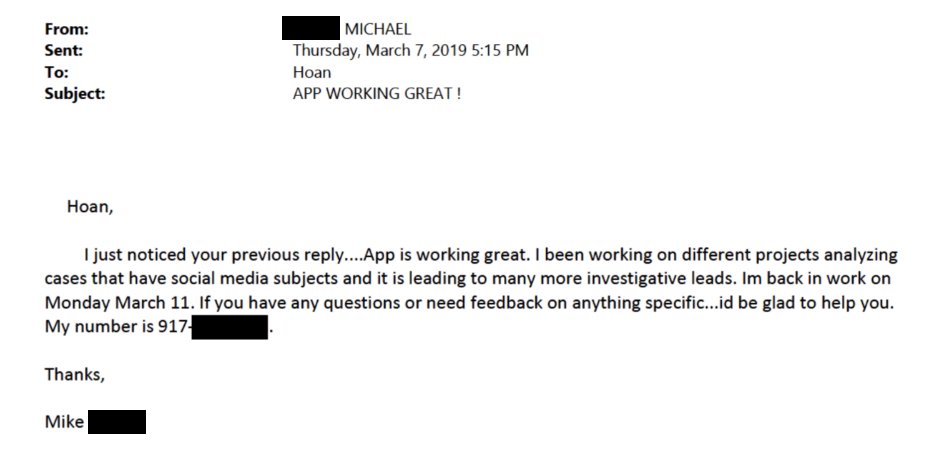

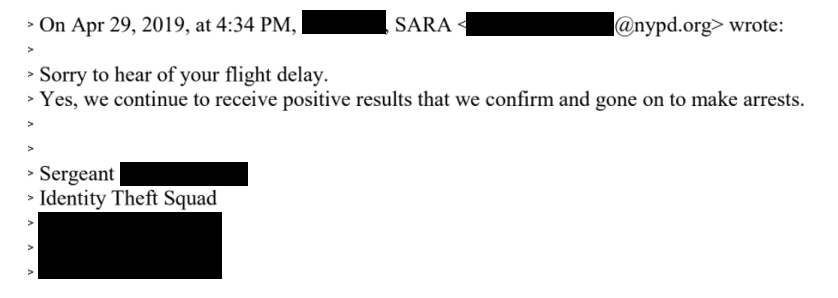

In one exchange, a detective working in the department’s facial recognition unit said, “App is working great.” In another, an officer on the NYPD’s identity theft squad said that “we continue to receive positive results” and have “gone on to make arrests.” (We have removed full names and email addresses from these images; other personal details were redacted in the original documents.)

Albert Fox Cahn, executive director at the Surveillance Technology Oversight Project, a nonprofit that advocates for the abolition of police use of facial recognition technology in New York City, says the records clearly contradict NYPD’s previous public statements on its use of Clearview AI.

“Here we have a pattern of officers getting Clearview accounts—not for weeks or months, but over the course of years,” he says. “We have evidence of meetings with officials at the highest level of the NYPD, including the facial identification section. This isn’t a few officers who decide to go off and get a trial account. This was a systematic adoption of Clearview’s facial recognition technology to target New Yorkers.”

Further, NYPD’s description of its facial recognition use, which is required under a recently passed law, says that “investigators compare probe images obtained during investigations with a controlled and limited group of photographs already within possession of the NYPD.” Clearview AI is known for its database of over 3 billion photos scraped from the web.

NYPD is working closely with immigration enforcement, and officers referred Clearview AI to ICE

The documents contain multiple emails from the NYPD that appear to be referrals to aid Clearview in selling its technology to the Department of Homeland Security. Two police officers had both NYPD and Homeland Security affiliations in their email signature, while another officer identified as a member of a Homeland Security task force.

New York is designated as a sanctuary city, meaning that local law enforcement limits its cooperation with federal immigration agencies. In fact, NYPD’s facial recognition policy statement says that “information is not shared in furtherance of immigration enforcement” and “access will not be given to other agencies for purposes of furthering immigration enforcement.”

“I think one of the big takeaways is just how lawless and unregulated the interactions and surveillance and data sharing landscape is between local police, federal law enforcement, immigration enforcement,” says Matthew Guariglia, an analyst at the Electronic Frontier Foundation. “There just seems to be so much communication, maybe data sharing, and so much unregulated use of technology.”

Cahn says the emails immediately ring alarm bells, particularly since a great deal of law enforcement information funnels through central systems known as fusion centers.

“You can claim you’re a sanctuary city all you want, but as long as you continue to have these DHS task forces, as long as you continue to have information fusion centers that allow real-time data exchange with DHS, you’re making that promise into a lie.”

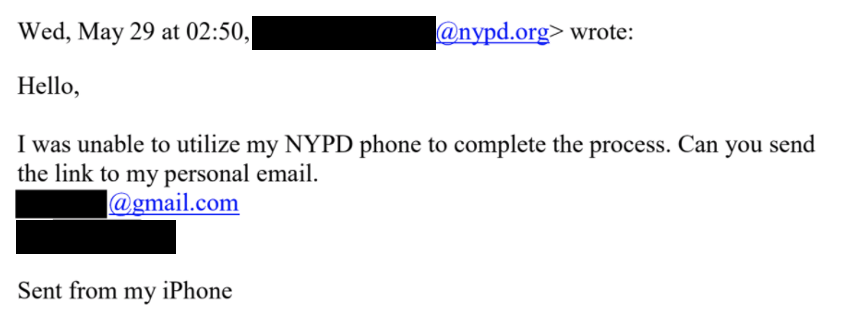

Many officers asked to use Clearview AI on their personal devices or through their personal email accounts

At least four officers asked for access to Clearview’s app on their personal devices or through personal emails. Department devices are closely regulated, and it can be difficult to download applications to official NYPD mobile phones. Some officers clearly opted to use their personal devices when department phones were too restrictive.

Clearview replied to this email, “Hi William, you should have a setup email in your inbox shortly.”

Jonathan McCoy is a digital forensics attorney at the Legal Aid Society and took part in filing the freedom of information request. He found the use of personal devices particularly troublesome: “My takeaway is that they were actively trying to circumvent NYPD policies and procedures that state that if you’re going to be using facial recognition technology, you have to go through FIS [the facial identification section] and they have to use the technology that’s already been approved by the NYPD wholesale.” NYPD does already have a facial recognition system, provided by a company called Dataworks.

Guariglia says it points to an attitude of carelessness by both the NYPD and Clearview AI. “I would be horrified to learn that police officers were using Clearview on their personal devices to identify people that then contributed to arrests or official NYPD investigations,” he says.

The concerns these emails raise are not just theoretical: they could allow the police to be challenged in court, and even have cases overturned because of failure to adhere to procedure. McCoy says the Legal Aid Society plans to use the evidence from the emails to defend clients who have been arrested as the result of an investigation that used facial recognition.

“We would hopefully have a basis to go into court and say that whatever conviction was obtained through the use of the software was done in a way that was not commensurate with NYPD policies and procedures,” he says. “Since Clearview is an untested and unreliable technology, we could argue that the use of such a technology prejudiced our client’s rights.”