The modern enterprise imaging and data value chain

During the past two decades, the health care sector has undergone a rapid and far-reaching digital transformation. But digitalization has generated a new challenge: information overload. According to one estimate, the volume of health care-related data being generated digitally doubles every 73 days. Much of it is stored in discrete silos that make cross-system access difficult: digital imaging and communications in medicine (DICOM) images, reports, multimedia data, and data from other sources, scattered across multiple departments.
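Taken at face value, a 73-day doubling time compounds quickly. Assuming steady exponential growth over a 365-day year (an idealization, since the estimate itself is approximate), the implied annual growth factor is:

$$
G_{\text{year}} = 2^{365/73} = 2^{5} = 32
$$

Under that assumption, the volume of newly generated health care data would grow roughly 32-fold in a single year.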

Meanwhile, powerful diagnostic tools often lack interoperability. The result: instead of supporting informed and actionable decision-making, the digital revolution too often stands in the way of more efficient diagnosis and better patient care.

Productivity improvements have helped a wide range of industries, but not health care. From 1999 to 2014, productivity in the health care sector increased by just 8%, whereas other industries achieved far greater efficiency gains of 18%. Productivity comparisons between industries tend to be imprecise, but they do show that health care lags far behind other industries, both in productivity and in realizing its potential.
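Because both figures cover the same 15-year span, they can be converted into approximate annual rates. Assuming steady compound growth from 1999 to 2014 (a simplification; the source figures give only the cumulative change):

$$
r_{\text{health care}} = 1.08^{1/15} - 1 \approx 0.51\%\ \text{per year}, \qquad
r_{\text{other}} = 1.18^{1/15} - 1 \approx 1.11\%\ \text{per year}
$$

On that basis, other industries raised productivity roughly twice as fast each year as health care did.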

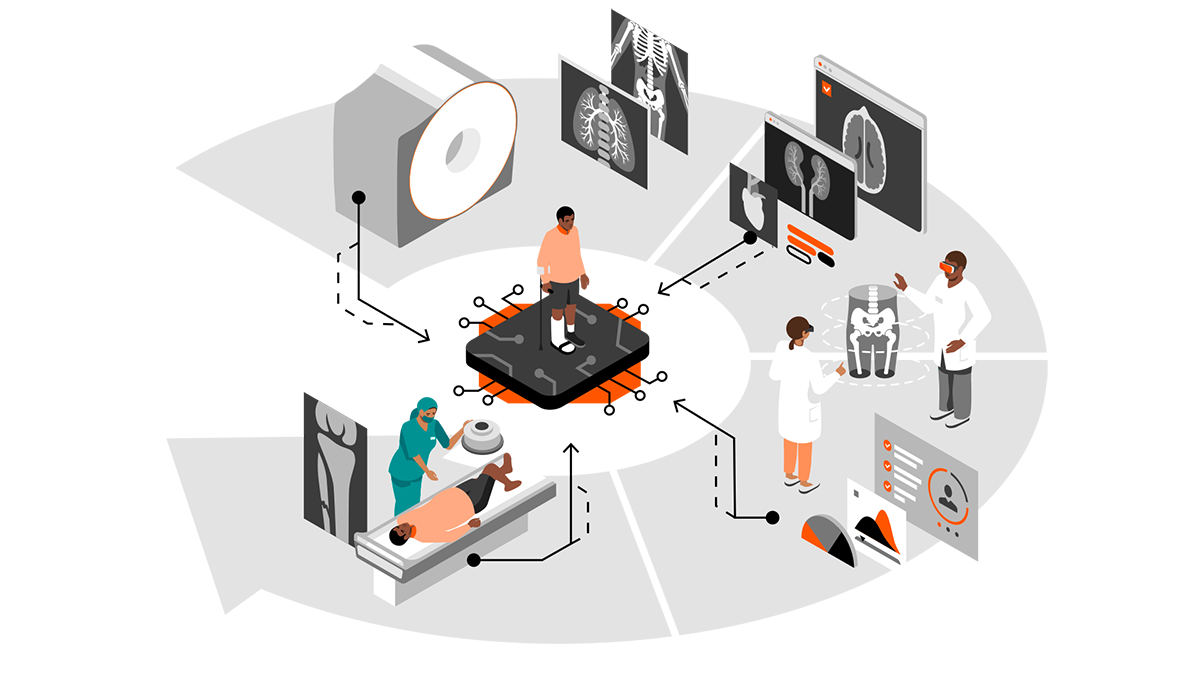

To improve productivity in health care operations, two things must happen. First, data must be understood as a strategic asset. It must be leveraged through intelligent, all-encompassing workflow solutions and through artificial intelligence (AI), which drives automation and puts the patient at the center of the imaging value chain.

Second, for a value chain to exist at all, the different fields of expertise must be connected, as seamlessly, openly, and securely as possible. The goal is to ensure that all relevant data is available when needed by patients, health care professionals, and medical researchers alike.

A modern enterprise imaging software solution must prioritize outcome optimization, improved diagnostics, and enhanced collaboration.

Health care today: gaps, bottlenecks, silos

The costs and consequences of the current fragmented state of health care data are far-reaching: operational inefficiencies and unnecessary duplication, treatment errors, and missed opportunities for basic research. Recent medical literature is filled with examples of missed opportunities—and patients put at risk because of a lack of data sharing.

More than four million Medicare patients are discharged to skilled nursing facilities (SNFs) every year. Most of them are elderly patients with complex conditions, and the transition can be hazardous. According to a 2019 study published in the American Journal of Managed Care, one of the main reasons patients fare poorly during this transition is a lack of health data sharing—including missing, delayed, or difficult-to-use information—between hospitals and SNFs. “Weak transitional care practices between hospitals and SNFs compromise quality and safety outcomes for this population,” researchers noted.

Even within hospitals, sharing data remains a major problem. A 2019 American Hospital Association study published in the journal Healthcare analyzed interoperability functions that are part of the Promoting Interoperability program, administered by the U.S. Centers for Medicare & Medicaid Services (CMS) and adopted by qualifying U.S. hospitals. The study showed that among 2,781 non-federal, acute-care hospitals, only 16.7% had adopted all six core functionalities required to meet the program’s Stage 3 certified electronic health record technology (CEHRT) objectives. Data interoperability in health care cannot be taken for granted.

Data silos and incompatible data sets remain another roadblock. In a 2019 article in the journal JCO Clinical Cancer Informatics, researchers analyzed data from the Cancer Imaging Archive (TCIA), looking specifically at nine lung and brain research data sets containing 659 data fields in order to understand what would be required to harmonize data for cross-study access. The effort took more than 329 hours over six months, simply to identify 41 overlapping data fields in three or more files, and to harmonize 31 of them.
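Counting fields that appear in "three or more files" is easy once field names agree; the costly part is deciding which differently named fields mean the same thing. The minimal Python sketch below illustrates both steps. The data set names, field names, and the `SYNONYMS` map are all hypothetical and do not come from the study; `overlapping_fields` is an illustrative helper, not the researchers' method.

```python
from collections import Counter

# Hypothetical field lists for four research data sets; the actual TCIA
# data sets and field names are not listed in the article.
DATASETS = {
    "lung_a": ["patient_id", "age", "sex"],
    "lung_b": ["patient_id", "age", "gender"],
    "brain_a": ["subject_id", "age", "gender"],
    "brain_b": ["patient_id", "age_years", "sex"],
}

# Hypothetical, hand-built synonym map that harmonizes differently named
# fields onto one canonical name.
SYNONYMS = {"gender": "sex", "subject_id": "patient_id", "age_years": "age"}


def overlapping_fields(datasets, min_datasets=3, synonyms=None):
    """Return canonical field names present in at least `min_datasets` data sets."""
    synonyms = synonyms or {}
    counts = Counter()
    for fields in datasets.values():
        # Map each field to its canonical name; using a set means a field is
        # counted at most once per data set.
        canonical = {synonyms.get(f, f) for f in fields}
        counts.update(canonical)
    return sorted(f for f, n in counts.items() if n >= min_datasets)


if __name__ == "__main__":
    print(overlapping_fields(DATASETS))                     # ['age', 'patient_id']
    print(overlapping_fields(DATASETS, synonyms=SYNONYMS))  # ['age', 'patient_id', 'sex']
```

Exact name matching misses `sex`/`gender`; only after harmonization does the field reach the three-data-set threshold. The sketch deliberately leaves out the hard part, which is building and validating the synonym map by hand.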

As researchers wrote in an August 2019 article in npj Digital Medicine, “[i]n the 21st century, the age of big data and artificial intelligence, each health care organization has built its own data infrastructure to support its own needs, typically involving on-premises computing and storage. Data is balkanized along organizational boundaries, severely constraining the ability to provide services to patients across a care continuum within one organization or across organizations.”

Focus on outcomes, and be smart

What can be done to bridge these gaps? Technology innovators and health care IT experts have already taken up the challenge. Important progress is being made on many fronts. Along the way, several key best practices have emerged, including:

- Ensure appropriateness via connectivity for patient pathways and a smart imaging value chain: Historically, digital images and other data have been siloed within a particular department, such as radiology, cardiology, orthopedics, or oncology. In the future, a patient’s data will follow that patient across all health care encounters and all specialties, using an open patient data model. This also includes clinical departments that have only recently begun their digital transformation, such as pathology.

- Manage the data load to support diagnostic outcomes: Too often, clinicians must sift through reams of irrelevant data to find the key information they need to make actionable decisions. Decision support software is proving to be a powerful tool for identifying and highlighting key diagnostic findings, delivering the data physicians need at the push of a button. Integrated state-of-the-art AI technologies have proven to be a key enabler of workflow automation and clinical efficiency gains.

- Secure access and connectivity to different interoperability standards: To address the continuing challenge of interoperability and ensure that all parts of the system use the same syntax and speak the same language, central core software modules will translate data coming in from a variety of sources, including third-party vendor offerings. As these modules expand, they will be able to make more and more connections, incorporating more data and functionality across medical specialties. At the same time, standardization increases security by providing a common technological basis. It also makes it possible to deliver updates and improvements across all areas quickly.

- Connect care systems and drive collaborative outcomes: The goal is to provide, in one comprehensive interface, the data physicians need and the tools they need to analyze that data and reach an actionable decision. The same principle positions everyone, from every specialty, as an equal player in a patient’s care. Using a single interface, all members of the care team will be able to participate throughout the course of care delivery, regardless of where data and devices are accessed from. Remote solutions will be an essential building block.
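In software terms, the "central core software modules" that translate incoming data resemble an adapter layer: each source gets one translator into a shared data model, and everything downstream works only with that model. The Python sketch below illustrates the pattern under stated assumptions. The `ImagingStudy` model, the `vendor_x` format, and all function names are hypothetical; only the DICOM attribute keywords (`PatientID`, `StudyDate`, `Modality`) and the DICOM `YYYYMMDD` date format come from the DICOM standard. It is not a description of any product's actual design.

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import Callable, Dict


@dataclass(frozen=True)
class ImagingStudy:
    """Hypothetical common record that every source is translated into."""
    patient_id: str
    study_date: date
    modality: str
    source: str


def from_dicom_like(record: dict) -> ImagingStudy:
    # Keys are DICOM attribute keywords; StudyDate uses the DICOM DA format YYYYMMDD.
    return ImagingStudy(
        patient_id=record["PatientID"],
        study_date=datetime.strptime(record["StudyDate"], "%Y%m%d").date(),
        modality=record["Modality"],
        source="dicom",
    )


def from_vendor_x(record: dict) -> ImagingStudy:
    # Hypothetical third-party export with its own field names and ISO 8601 dates.
    return ImagingStudy(
        patient_id=record["pid"],
        study_date=date.fromisoformat(record["exam_date"]),
        modality=record["exam_type"].upper(),
        source="vendor_x",
    )


# Registry of adapters: supporting a new source means registering one more
# function, while downstream consumers only ever see ImagingStudy.
ADAPTERS: Dict[str, Callable[[dict], ImagingStudy]] = {
    "dicom": from_dicom_like,
    "vendor_x": from_vendor_x,
}


def translate(source: str, record: dict) -> ImagingStudy:
    """Translate a source-specific record into the common model."""
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for source {source!r}") from None
    return adapter(record)


if __name__ == "__main__":
    a = translate("dicom", {"PatientID": "P001", "StudyDate": "20190816", "Modality": "CT"})
    b = translate("vendor_x", {"pid": "P001", "exam_date": "2019-08-20", "exam_type": "mr"})
    # Both records now share one structure and can be merged into a single patient timeline.
    for study in sorted([b, a], key=lambda s: s.study_date):
        print(study)
```

The design choice is that adding a source means writing one adapter rather than changing every consumer, which is one way the "more and more connections" described above could grow without multiplying point-to-point integrations.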

What’s next?

We all feel the need for change in health care. The question is not whether something must change, but how we shape the process. As in many other industries, software is the central building block on which health care will be based in the 21st century. How health care gets there depends strongly on each provider’s strategy.

Learn how Syngo Carbon and Siemens Healthineers built an outcome-driven imaging system (ODIS) to help achieve this change.

This content was produced by Siemens Healthineers. It was not written by MIT Technology Review’s editorial staff.