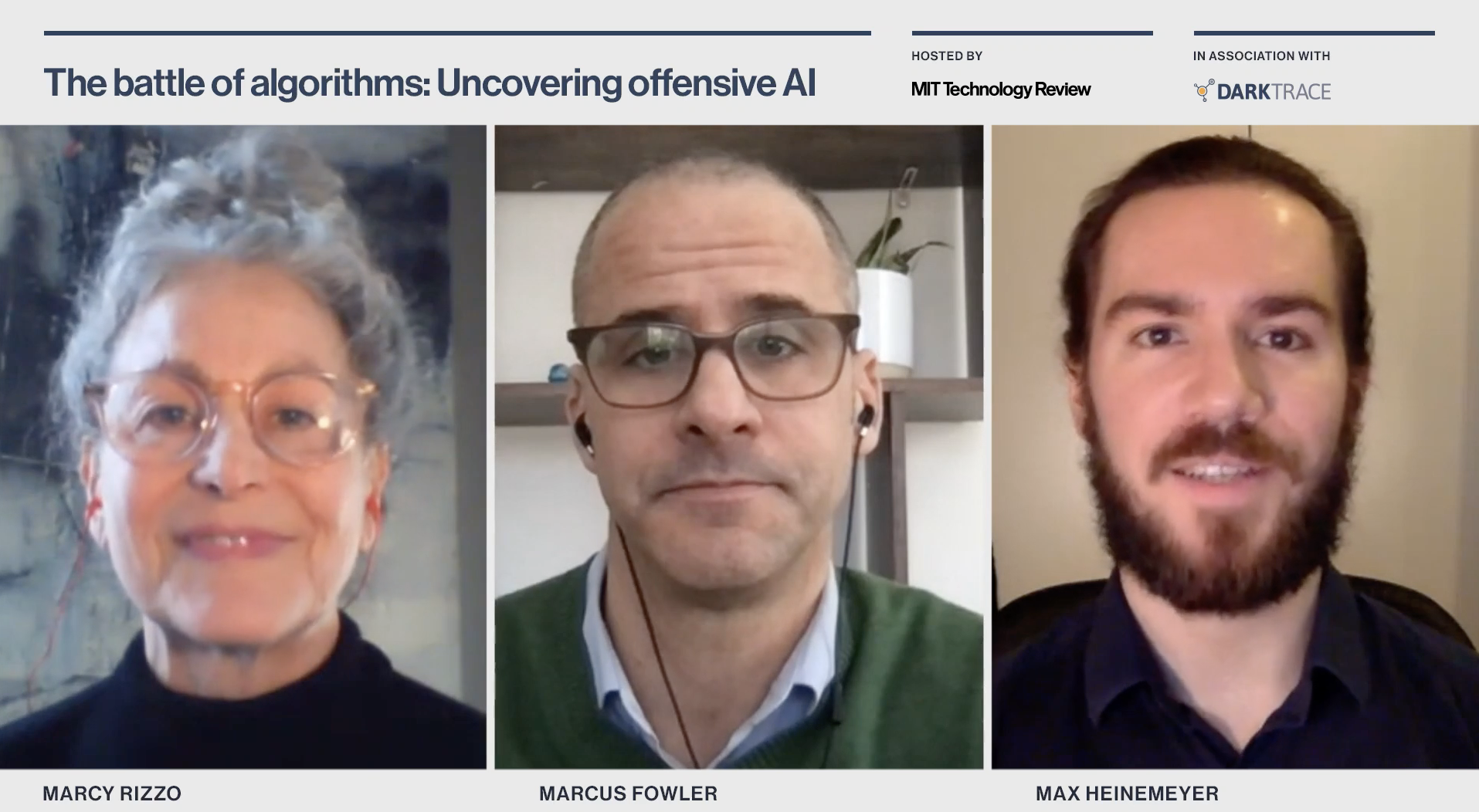

The battle of algorithms: Uncovering offensive AI

As machine-learning applications move into the mainstream, a new era of cyber threat is emerging, one in which attackers use offensive artificial intelligence (AI) to supercharge their campaigns. Offensive AI allows attackers to automate reconnaissance, craft tailored impersonation attacks, and even launch attacks that self-propagate to avoid detection. Security teams can prepare by turning to defensive AI to fight back: autonomous cyber defense that learns on the job to detect and respond to even the most subtle indicators of an attack, no matter where it appears.

MIT Technology Review recently sat down with experts from Darktrace—Marcus Fowler, director of strategic threat, and Max Heinemeyer, director of threat hunting—to discuss the current and emerging applications of offensive AI, defensive AI, and the ongoing battle of algorithms between the two.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.