Preparing for AI-enabled cyberattacks

Cyberattacks continue to grow in prevalence and sophistication. With the ability to disrupt business operations, wipe out critical data, and cause reputational damage, they pose an existential threat to businesses, critical services, and infrastructure. Today’s new wave of attacks is outsmarting and outpacing humans, and even starting to incorporate artificial intelligence (AI). What’s known as “offensive AI” will enable cybercriminals to direct targeted attacks at unprecedented speed and scale while flying under the radar of traditional, rule-based detection tools.

Some of the world’s largest and most trusted organizations have already fallen victim to damaging cyberattacks, undermining their ability to safeguard critical data. With offensive AI on the horizon, organizations need to adopt new defenses to fight back: the battle of algorithms has begun.

MIT Technology Review Insights, in association with AI cybersecurity company Darktrace, surveyed more than 300 C-level executives, directors, and managers worldwide to understand how they’re addressing the cyberthreats they’re up against—and how to use AI to help fight against them.

As it is, 60% of respondents report that human-driven responses to cyberattacks are failing to keep up with automated attacks, and as organizations gear up for a greater challenge, more sophisticated technologies are critical. In fact, an overwhelming majority of respondents—96%—report they’ve already begun to guard against AI-powered attacks, with some enabling AI defenses.

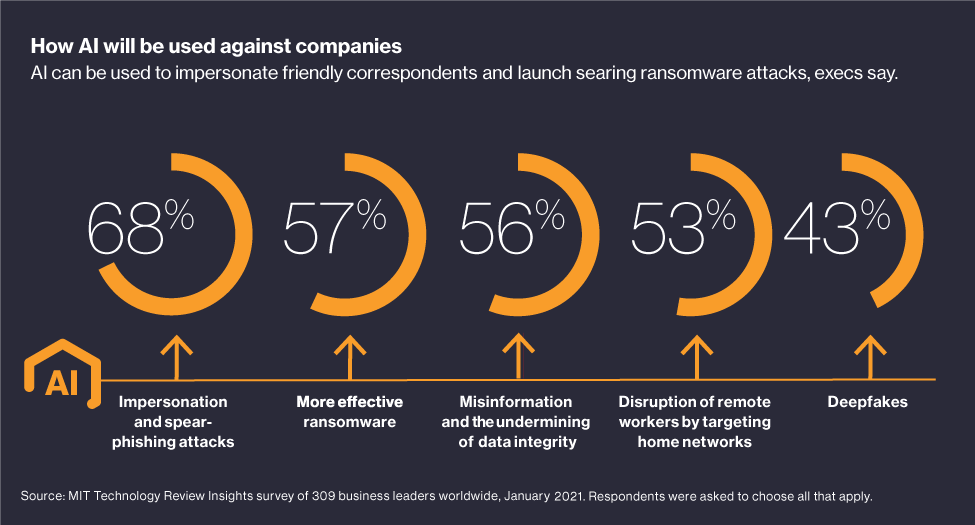

Offensive AI cyberattacks are daunting, and the technology is fast and smart. Consider deepfakes, one type of weaponized AI tool: fabricated images or videos depicting scenes or people that were never present or that never even existed.

In January 2020, the FBI warned that deepfake technology had already reached the point where artificial personas could be created that could pass biometric tests. At the rate that AI neural networks are evolving, an FBI official said at the time, national security could be undermined by high-definition, fake videos created to mimic public figures so that they appear to be saying whatever words the video creators put in their manipulated mouths.

This is just one example of the technology being used for nefarious purposes. AI could, at some point, conduct cyberattacks autonomously, disguising its operations and blending in with regular activity. The technology is out there for anyone to use, including threat actors.

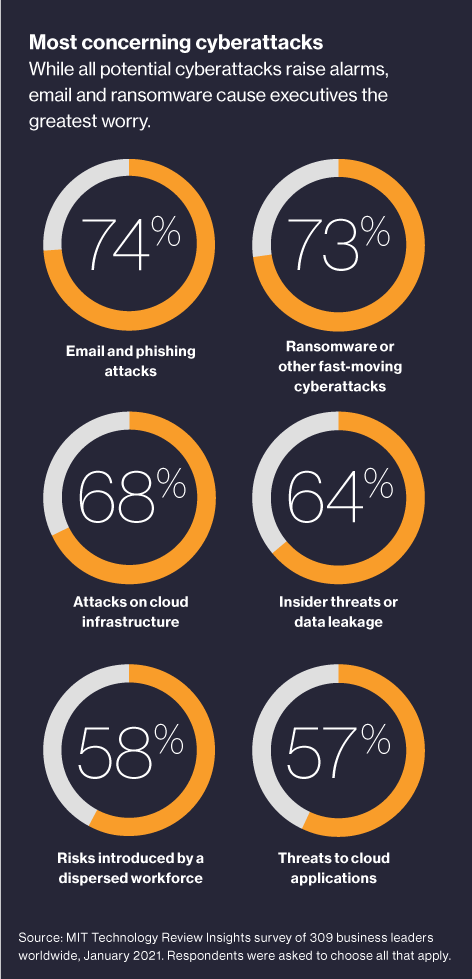

Offensive AI risks and developments in the cyberthreat landscape are redefining enterprise security, as humans already struggle to keep pace with advanced attacks. In particular, survey respondents said that email and phishing attacks cause them the most angst, with nearly three-quarters rating email threats as the most worrisome. That breaks down to 40% of respondents who report finding email and phishing attacks “very concerning,” while 34% call them “somewhat concerning.” It’s not surprising, as 94% of detected malware is still delivered by email. The traditional methods of stopping email-delivered threats rely on historical indicators (namely, previously seen attacks) as well as on the ability of the recipient to spot the signs, both of which can be bypassed by sophisticated phishing incursions.

When offensive AI is thrown into the mix, “fake email” will be almost indistinguishable from genuine communications from trusted contacts.

How attackers exploit the headlines

The coronavirus pandemic presented a lucrative opportunity for cybercriminals. Email attackers in particular followed a long-established pattern: take advantage of the headlines of the day—along with the fear, uncertainty, greed, and curiosity they incite—to lure victims in what has become known as “fearware” attacks. With employees working remotely, without the security protocols of the office in place, organizations saw successful phishing attempts skyrocket. Max Heinemeyer, director of threat hunting for Darktrace, notes that when the pandemic hit, his team saw an immediate evolution of phishing emails. “We saw a lot of emails saying things like, ‘Click here to see which people in your area are infected,’” he says. When offices and universities started reopening last year, new scams emerged in lockstep, with emails offering “cheap or free covid-19 cleaning programs and tests,” says Heinemeyer.

There has also been an increase in ransomware, which has coincided with the surge in remote and hybrid work environments. “The bad guys know that now that everybody relies on remote work. If you get hit now, and you can’t provide remote access to your employee anymore, it’s game over,” Heinemeyer says. “Whereas maybe a year ago, people could still come into work, could work offline more, but it hurts much more now. And we see that the criminals have started to exploit that.”

What’s the common theme? Change, rapid change, and, in the case of the global shift to working from home, complexity. That illustrates the problem with traditional cybersecurity, which relies on signature-based approaches: static defenses aren’t very good at adapting to change. Those approaches extrapolate from yesterday’s attacks to determine what tomorrow’s will look like. “How could you anticipate tomorrow’s phishing wave? It just doesn’t work,” Heinemeyer says.

Download the full report.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.