Inside the bitter campus privacy battle over smart building sensors

When computer science students and faculty at Carnegie Mellon University’s Institute for Software Research returned to campus in the summer of 2020, there was a lot to adjust to.

Beyond the inevitable strangeness of being around colleagues again after months of social distancing, the department was also moving into a brand-new building: the 90,000-square-foot, state-of-the-art TCS Hall.

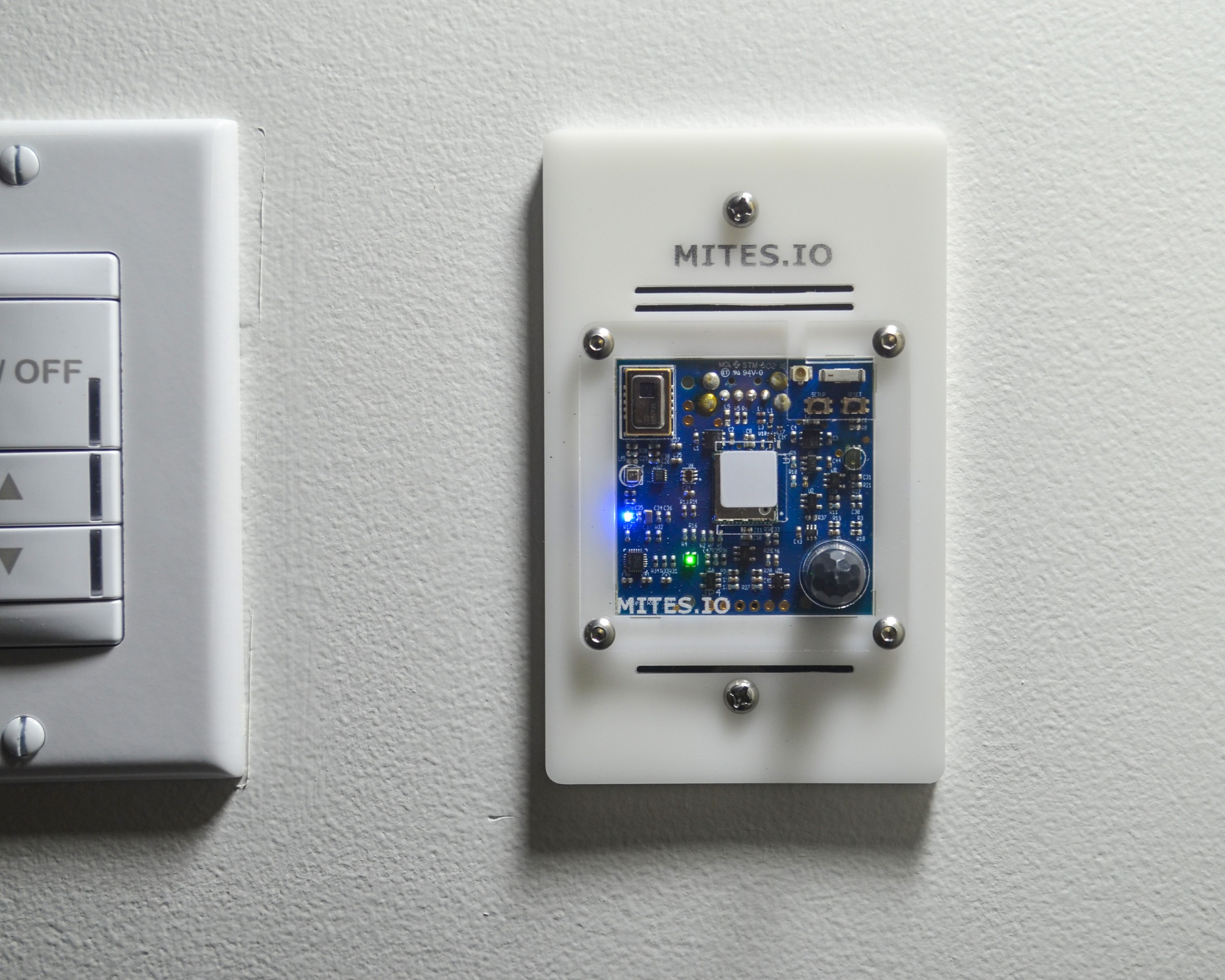

The hall’s futuristic features included carbon dioxide sensors that automatically pipe in fresh air, a rain garden, a yard for robots and drones, and experimental super-sensing devices called Mites. Mounted in more than 300 locations throughout the building, these light-switch-size devices can measure 12 types of data—including motion and sound. Mites were embedded on the walls and ceilings of hallways, in conference rooms, and in private offices, all as part of a research project on smart buildings led by CMU professor Yuvraj Agarwal and PhD student Sudershan Boovaraghavan and including another professor, Chris Harrison.

“The overall goal of this project,” Agarwal explained at an April 2021 town hall meeting for students and faculty, is to “build a safe, secure, and easy-to-use IoT [Internet of Things] infrastructure,” referring to a network of sensor-equipped physical objects like smart light bulbs, thermostats, and TVs that can connect to the internet and share information wirelessly.

Not everyone was pleased to find the building full of Mites. Some in the department felt that the project violated their privacy rather than protected it. In particular, students and faculty whose research focused more on the social impacts of technology felt that the device’s microphone, infrared sensor, thermometer, and six other sensors, which together could at least sense when a space was occupied, would subject them to experimental surveillance without their consent.

“It’s not okay to install these by default,” says David Widder, a final-year PhD candidate in software engineering, who became one of the department’s most vocal opponents of Mites. “I don’t want to live in a world where one’s employer installing networked sensors in your office without asking you first is a model for other organizations to follow.”

All technology users face similar questions about how and where to draw a personal line when it comes to privacy. But outside of our own homes (and sometimes within them), we increasingly lack autonomy over these decisions. Instead, our privacy is determined by the choices of the people around us. Walking into a friend’s house, a retail store, or just down a public street leaves us open to many different types of surveillance over which we have little control.

Against a backdrop of skyrocketing workplace surveillance, prolific data collection, increasing cybersecurity risks, rising concerns about privacy and smart technologies, and fraught power dynamics around free speech in academic institutions, Mites became a lightning rod within the Institute for Software Research.

Voices on both sides of the issue were aware that the Mites project could have an impact far beyond TCS Hall. After all, Carnegie Mellon is a top-tier research university in science, technology, and engineering, and how it handles this research may influence how sensors will be deployed elsewhere. “When we do something, companies … [and] other universities listen,” says Widder.

Indeed, the Mites researchers hoped that the process they’d gone through “could actually be a blueprint for smaller universities” looking to do similar research, says Agarwal, an associate professor in computer science who has been developing and testing machine learning for IoT devices for a decade.

But the crucial question is what happens if—or when—the super-sensors graduate from Carnegie Mellon, are commercialized, and make their way into smart buildings the world over.

The conflict is, in essence, an attempt by one of the world’s top computer science departments to litigate thorny questions around privacy, anonymity, and consent. But it has deteriorated from an academic discussion into a bitter dispute, complete with accusations of bullying, vandalism, misinformation, and workplace retaliation. As in so many conversations about privacy, the two sides have been talking past each other, with seemingly incompatible conceptions of what privacy means and when consent should be required.

Ultimately, if the people whose research sets the agenda for technology choices are unable to come to a consensus on privacy, where does that leave the rest of us?

The future, according to Mites

The Mites project was based on two basic premises: First, that buildings everywhere are already collecting data without standard privacy protections and will continue to do so. And second, that the best solution is to build better sensors—more useful, more efficient, more secure, and better-intentioned.

In other words, Mites.

“What we really need,” Agarwal explains, is to “build out security-, privacy-, safety-first systems … make sure that users have trust in these systems and understand the clear value proposition.”

“I would rather [we] be leading it than Google or ExxonMobil,” adds Harrison, an associate professor of human-computer interaction and a faculty collaborator on the project, referring to sensor research. (Google funded early iterations of the research that led to Mites, while JPMorgan Chase is providing “generous support of smart building research at TCS Hall,” as noted on plaques hung around the building.)

Mites—the name refers to both the individual devices and the overall platform—are all-in-one sensors supported by a hardware stack and on-device data processing. While Agarwal says they were not named after the tiny creature, the logo on the project’s website depicts a bug.

According to the researchers, Mites represent a significant improvement over current building sensors, which typically serve a single purpose, as motion detectors and thermometers do. In addition, many smart devices today work only in isolation or with specific platforms like Google’s Nest or Amazon’s Alexa; they can’t interact with each other.

Current IoT systems also offer little transparency about exactly what data is being collected, how it is being transmitted, and what security protocols are in place, all while erring on the side of over-collection.

The researchers hoped Mites would address these shortcomings and facilitate new uses and applications for IoT sensors. For example, microphones on Mites could help students find a quiet room to study, they said—and Agarwal suggested at the town hall meeting in April 2021 that the motion sensor could tell an office occupant whether custodial staff were actually cleaning offices each night. (The researchers have since said this was a suggested use case specific to covid-19 protocols and that it could help cleaning staff focus on high-traffic areas—but they have moved away from the possibility.)

The researchers also believe that in the long term, Mites, and building sensors more generally, are key to environmental sustainability. They see other, more ambitious use cases too. A university write-up describes this scenario: In 2050, a woman named Emily starts experiencing memory loss. Her doctor suggests installing Mites around her home to “connect to … smart speakers and tell her when her laundry is done and when she’s left the oven on” or to evaluate her sleep by noting the sound of sheets rustling or nighttime trips to the bathroom. “They are helpful to Emily, but even more helpful to her doctor,” the article claims.

As multipurpose devices integrated with a platform, Mites were supposed to solve all sorts of problems without going overboard on data collection. Each device contains nine sensors that can pick up all sorts of ambient information about a room, including sound, light, vibrations, motion, temperature, and humidity—a dozen different types of data in all. To protect privacy, it does not capture video or photos.

The CMU researchers are not the first to attempt such a project. An IoT research initiative out of the Massachusetts Institute of Technology, similarly called MITes, designed portable sensors to collect environmental data like movement and temperature. It ran from 2005 to 2016, primarily as part of PlaceLab, an experimental laboratory modeled after an apartment in which carefully vetted volunteers consented to live and have their interactions studied. The MIT and CMU projects are unrelated. (MIT Technology Review is funded in part by MIT but maintains editorial independence.)

The Carnegie Mellon researchers say the Mites system extracts only some of the data the devices collect, through a technical process called “featurization.” This should make it more difficult to trace, say, a voice back to an individual.

Machine learning—which, through a technique called edge computing, would eventually take place on the device rather than on a centralized server—then recognizes the incoming data as the result of certain activities. The hope is that a particular set of vibrations could be translated in real time into, for example, a train passing by.

The researchers say that featurization and other types of edge computing will make Mites more privacy-protecting, since these technologies minimize the amount of data that must be sent, processed, and stored in the cloud. (At the moment, machine learning is still taking place on a separate server on campus.)
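The article doesn’t spell out how Mites featurizes its data. As a rough illustration of the general idea only, the minimal Python sketch below assumes one common approach: a short frame of raw audio samples is reduced to its overall loudness plus a few coarse frequency-band energies, with phase thrown away. The function name `featurize_frame`, the frame size, and the four-band layout are hypothetical choices for this example, not the Mites team’s code.

```python
import cmath
import math


def featurize_frame(samples, num_bands=4):
    """Summarize one audio frame as RMS loudness plus coarse band energies.

    Hypothetical illustration only; this is not the Mites project's pipeline.
    """
    n = len(samples)
    if n == 0 or num_bands < 1:
        raise ValueError("need a non-empty frame and at least one band")

    # Naive discrete Fourier transform over the non-negative frequency bins.
    # abs() keeps each bin's magnitude and discards its phase.
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        magnitudes.append(abs(acc))

    # Pool neighboring bins into a handful of coarse bands. Dropping phase and
    # pooling bins means many different waveforms map to the same features.
    bins_per_band = math.ceil(len(magnitudes) / num_bands)
    band_energy = []
    for b in range(num_bands):
        chunk = magnitudes[b * bins_per_band:(b + 1) * bins_per_band]
        band_energy.append(sum(m * m for m in chunk))

    rms = math.sqrt(sum(s * s for s in samples) / n)
    return {"rms": rms, "band_energy": band_energy}


if __name__ == "__main__":
    # A 64-sample frame becomes five numbers: one RMS value and four band energies.
    frame = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
    print(featurize_frame(frame))
```

In this sketch, a sine wave and a phase-shifted copy of it yield identical features, which is the intuition behind the claim that such summaries are hard to trace back to a voice. Whether enough information survives in a real pipeline with many more features is a separate question that a toy example cannot settle.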

“Our vision is that there’s one sensor to rule them all, if you’ve seen Lord of the Rings. The idea is rather than this heterogeneous collection of sensors, you have one sensor that’s in a two-inch-by-two-inch package,” Agarwal explained in the April 2021 town hall, according to a recording of the meeting shared with MIT Technology Review.

But if the departmental response is any indication, maybe a ring of power that let its wearer achieve domination over others wasn’t the best analogy.

A tense town hall

Unless you are looking for them, you might not know that the bright and airy TCS Hall, on the western edge of Carnegie Mellon’s Pittsburgh campus, is covered in Mites devices—314 of them as of February 2023, according to Agarwal.

But look closely, and they are there: small square circuit boards encased in plastic and mounted onto standard light switch plates. They’re situated inside the entrances of common rooms and offices, by the thermostats and light controls, and in the ceilings.

The only locations in TCS Hall that are Mites-free, in fact, are the bathrooms—and the fifth floor, where Tata Consultancy Services, the Indian multinational IT company that donated $35 million to fund the building bearing its name, runs a research and innovation center. (A spokesperson said, “TCS is not involved in the Mites project.”)

Widder, whose PhD thesis focuses on how to help AI developers think about their responsibility for the harm their work could cause, remembers finding out about the Mites sensors in his office sometime in fall of 2020. And once he noticed them, he couldn’t unsee the blinking devices mounted on his wall and ceiling, or the two on the hallway ceiling just outside his door.

Nor was Widder immediately aware of how to turn the devices off; they did not have an on-off switch. (Ultimately, his attempts to force that opt-out would threaten to derail his career.)

This was a problem for the budding tech ethicist. Widder’s academic work explores how software developers think about the ethical implications of the products that they build; he’s particularly interested in helping computer scientists understand the social consequences of technology. And so Mites was of both professional and personal concern. The same issues of surveillance and informed consent that he helped computer scientists grapple with had found their way into his very office.

CMU isn’t the only university to test out new technologies on campus before sending them into the wider world. University campuses have long been a hotbed for research—with sometimes questionable policies around consent. Timnit Gebru, a tech ethicist and the founder of the Distributed AI Research Institute, cites early research on facial recognition that was built on surveillance data collected by academic researchers. “So many of the problematic data practices we see in industry were first done in the research world, and they then get transported to industry,” she says.

It was through that lens that Widder viewed Mites. “I think nonconsensual data collection for research … is usually unethical. Pervasive sensors installed in private and public spaces make increasingly pervasive surveillance normal, and that is a future that I don’t want to make easier,” he says.

He voiced his concerns in the department’s Slack channel, in emails, and in conversations with other students and faculty members—and discovered that he wasn’t alone. Many other people were surprised to learn about the project, he says, and many shared his questions about what the sensor data would be used for and when collection would start.

“I haven’t been to TCS Hall yet, but I feel the same way … about the Mites,” another department member wrote on Slack in April 2021. “I know I would feel most comfortable if I could unplug the one in my office.”

The researchers say that they followed the university’s required processes for data collection and received sign-off after a review by its institutional review board (IRB) and lawyers. The IRB, which oversees research involving human subjects as required by US federal regulation, had provided feedback on the Mites research proposal before ultimately approving the project in March 2021. According to a public FAQ about the project, the board determined that simply installing Mites and collecting data about the environment did not require IRB approval or prior consent from occupants of TCS Hall, with one exception: audio data collection in private offices, which would be based on an “opt-in” consent process. Approval and consent would be required for later stages of the project, when office occupants would use a mobile app allowing them to interact with Mites data.

The Mites researchers also ran the project by the university’s general counsel to review whether the use of microphones in the sensors violated Pennsylvania state law, which mandates two-party consent in audio recording. “We have had extensive discussions with the CMU-Office of the General Counsel and they have verified that we are not violating the PA wiretap law,” the project’s FAQ reads.

Overall, the Institute for Software Research, since renamed Software and Societal Systems, was split. Some of its most powerful voices, including the department chair (and Widder’s thesis co-advisor), James Herbsleb, encouraged department members to support the research. “I want to repeat that this is a very important project … if you want to avoid a future where surveillance is routine and unavoidable!” he wrote in an email shortly after the town hall.

“The initial step was to … see how these things behave,” says Herbsleb, comparing the Mites sensors to motion detectors that people might want to test out. “It’s purely just, ‘How well does it work as a motion detector?’ And, you know, nobody’s asked to consent. It’s just trying out a piece of hardware.”

Of course, the system’s advanced capabilities meant that Mites were not just motion detectors—and other department members saw things differently. “It’s a lot to ask of people to have a sensor with a microphone that is running in their office,” says Jonathan Aldrich, a computer science professor, even if “I trust my coworkers as a general principle and I believe they deserve that trust.” He adds, “Trusting someone to be a good colleague is not the same as giving them a key to your office or having them install something in your office that can record private things.” Allowing someone else to control a microphone in your office, he says, is “very much like giving someone else a key.”

As the debate built over the next year, it pitted students against their advisors and academic heroes as well—although many objected in private, fearing the consequences of speaking out against a well-funded, university-backed project.

In the video recording of the town hall obtained by MIT Technology Review, attendees asked how researchers planned to notify building occupants and visitors about data collection. Jessica Colnago, then a PhD student, was concerned about how the Mites’ mere presence would affect studies she was conducting on privacy. “As a privacy researcher, I would feel morally obligated to tell my participant about the technology in the room,” she said in the meeting. While “we are all colleagues here” and “trust each other,” she added, “outside participants might not.”

Attendees also wanted to know whether the sensors could track how often they came into their offices and at what time. “I’m in office [X],” Widder said. “The Mite knows that it’s recording something from office [X], and therefore identifies me as an occupant of the office.” Agarwal responded that none of the analysis on the raw data would attempt to match that data with specific people.

At one point, Agarwal also mentioned that he had gotten buy-in on the idea of using Mites sensors to monitor cleaning staff—which some people in the audience interpreted as facilitating algorithmic surveillance or, at the very least, clearly demonstrating the unequal power dynamics at play.

A sensor system that could be used to surveil workers concerned Jay Aronson, a professor of science, technology, and society in the history department and the founder of the Center for Human Rights Science, who became aware of Mites after Widder brought the project to his attention. University staff like administrative and facilities workers are more likely to be negatively impacted and less likely to reap any benefits, said Aronson. “The harms and the benefits are not equally distributed,” he added.

Similarly, students and nontenured faculty seemingly had very little to directly gain from the Mites project and faced potential repercussions both from the data collection itself and, they feared, from speaking up against it. We spoke with five students in addition to Widder who felt uncomfortable both with the research project and with voicing their concerns.

One of those students was part of a small cohort of 45 undergraduates who spent time at TCS Hall in 2021 as part of a summer program meant to introduce them to the department as they considered applying for graduate programs. The town hall meeting was the first time some of them learned about the Mites. Some became upset, concerned they were being captured on video or recorded.

But the Mites weren’t actually recording any video. And any audio captured by the microphones was scrambled so that it could not be reconstructed.

In fact, the researchers say that the Mites were not—and are not yet—capturing any usable data at all.

For the researchers, this “misinformation” about the data being collected, as Boovaraghavan described it in an interview with MIT Technology Review, was one of the project’s biggest frustrations.

But if the town hall was meant to clarify details about the project, it exacerbated some of that confusion instead. Although an earlier department-wide email thread had made clear that the sensors were not yet collecting data, that was lost in the tense discussion. At some points, the researchers indicated that no data was or would be collected without IRB approval (which had been received the previous month), and at other points they said that the sensors were only collecting “telemetry data” (basically to ensure they were powered up and connected) and that the microphone “is off in all private offices.” (In an emailed statement to MIT Technology Review, Boovaraghavan clarified that “data has been captured in the research teams’ own private or public spaces but never in other occupants’ spaces.”)

For some who were unhappy, exactly what data the sensors were currently capturing was beside the point. It didn’t matter that the project was not yet fully operational. Instead, the concern was that sensors more powerful than anything previously available had been installed in offices without consent. Sure, the Mites were not collecting data at that moment. But at some date still unspecified by the researchers, they could be. And those affected might not get a say.

Widder says the town hall, along with follow-up one-on-one meetings with the researchers, actually made him “more concerned.” He grabbed his Phillips screwdriver. He unplugged the Mites in his office, unscrewed the sensors from the wall and ceiling, and removed the Ethernet cables from their jacks.

He put the Mites in a plexiglass box on his shelf and sent an email to the research team, his advisors, and the department’s leadership letting them know he’d unplugged the sensors, kept them intact, and wanted to give them back. With others in the department, he penned an anonymous open letter that detailed more of his concerns.

Is it possible to clearly define “privacy”?

The conflict at TCS Hall illustrates what makes privacy so hard to grapple with: it’s subjective. There isn’t one agreed-upon standard for what privacy means or when exactly consent should be required for personal data to be collected—or what even counts as personal data. People have different conceptions of what is acceptable. The Mites debate highlighted the discrepancies between technical approaches to collecting data in a more privacy-preserving way and the “larger philosophical and social science side of privacy,” as Kyle Jones, a professor of library and information science at Indiana University who studies student privacy in higher education, puts it.

Some key issues in the broader debates about privacy were particularly potent throughout the Mites dispute. What does informed consent mean, and under what circumstances is it necessary? What data can actually identify someone, even if it does not meet the most common definitions of “personally identifiable information”? And are privacy-protecting technologies and processes adequate if they aren’t communicated clearly to users?

For the researchers, these questions had a straightforward answer: “My privacy can’t be invaded if, literally, there’s no data collected about me,” says Harrison.

Even so, the researchers say, consent mechanisms were in place. “The ability to power off the sensor by requesting it was built in from the start. Similarly, the ability to turn on/off any individual sensor on any Mites board was also built in from the get-go,” they wrote in an email.

But though the functionality may have existed, it wasn’t well communicated to the department, as an internal Slack exchange showed. “The one general email that was sent did not provide a procedure to turn them off,” noted Aldrich.

Students we spoke with highlighted the reality that requiring them to opt out of a high-profile research project, rather than giving them the chance to opt in, fails to account for university power dynamics. In an email to MIT Technology Review, Widder said he doesn’t believe that the option to opt out via email request was valid, because many building occupants were not aware of it and because opting out would identify anyone who essentially disagreed with the research.

Aldrich was additionally concerned about the technology itself.

“Can you … reconstruct speech from what they’ve done? There’s enough bits that it’s theoretically possible,” he says. “The [research team] thinks it’s impossible, but we don’t have proof of this, right?”

But a second concern was social: Aldrich says he didn’t mind the project until a colleague outside the department asked not to meet in TCS Hall because of the sensors. That changed his mind. “Do I really want to have something in my office that is going to keep a colleague from coming and meeting with me in my office? The answer was pretty clearly no. However I felt about it, I didn’t want it to be a deterrent for someone else to meet with me in my office, or to [make them] feel uncomfortable,” he says.

The Mites team posted signs around the building—in hallways, common areas, stairwells, and some rooms—explaining what the devices were and what they would collect. Eventually, the researchers added a QR code linking to the project’s 20-page FAQ document. The signs were small, laminated letter-size papers that some visitors said were easy to miss and hard to understand.

“When I saw that, I was just thinking, wow, that’s a very small description of what’s going on,” noted one such visitor, Se A Kim, an undergraduate student who made multiple visits to TCS Hall in the spring of 2022 for a design school assignment to explore how to make visitors aware of data collection in TCS’s public spaces. When she interviewed a number of them, she was surprised by how many were still unaware of the sensors.

One concern repeated by Mites opponents is that even if the current Mites deployment is not set up to collect the most sensitive data, like photos or videos, and is not meant to identify individuals, this says little about what data it might collect—or what that data might be combined with—in the future. Privacy researchers have repeatedly shown that aggregated, anonymized data can easily be de-anonymized.

This is most often the case with far larger data sets—collected, for example, by smartphones. Apps and websites might not have the phone number or the name of the phone’s owner, but they often have access to location data that makes it easy to reverse-engineer those identifying details. (Mites researchers have since changed how they handle data collection in private offices by grouping multiple offices together. This makes it harder to ascertain the behavior of individual occupants.)
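The researchers haven’t published exactly how they group offices. The minimal Python sketch below assumes a simple scheme to show why grouping helps: per-office occupancy readings for one time slot are reported only as a count per group, and any group with too few offices is suppressed entirely, because a “group” of one office would expose its occupant directly. The function name `aggregate_by_group`, the office-to-group mapping, and the `min_offices` threshold of 3 are hypothetical.

```python
from collections import defaultdict


def aggregate_by_group(readings, office_to_group, min_offices=3):
    """Report occupancy per group of offices instead of per office.

    readings: dict mapping office id -> occupied (bool) for one time slot.
    office_to_group: dict mapping office id -> group id (hypothetical layout).
    Groups with fewer than min_offices members are left out of the report.
    """
    members = defaultdict(list)
    for office, occupied in readings.items():
        members[office_to_group[office]].append(occupied)

    report = {}
    for group, values in members.items():
        if len(values) < min_offices:
            continue  # too small to hide any individual office
        # Publish how many offices were occupied, not which ones.
        report[group] = sum(values)
    return report


if __name__ == "__main__":
    readings = {"4101": True, "4102": False, "4103": True, "4201": True}
    groups = {"4101": "A", "4102": "A", "4103": "A", "4201": "B"}
    print(aggregate_by_group(readings, groups))
```

With these sample readings, group A is reported as having two occupied offices, and the single-office group B is withheld. Aggregation of this kind raises the bar for tracking one person, though, as the reidentification research above suggests, it is not a guarantee on its own.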

Beyond the possibility of reidentification, who exactly can access a user’s data is often unknown with IoT devices—whether by accident or by system design. Incidents abound in which consumer smart-home devices, from baby monitors to Google Home speakers to robot vacuums, have been hacked or their data has been shared without their users’ knowledge or consent.

The Mites research team was aware of these well-known privacy issues and security breaches, but unlike their critics, who saw these precedents as a reason not to trust the installation of even more powerful IoT devices, Agarwal, Boovaraghavan, and Harrison saw them as motivation to create something better. “Alexa and Google Homes are really interesting technology, but some people refuse to have them because that trust is broken,” Harrison says. He felt the researchers’ job was to figure out how to build a new device that was trustworthy from the start.

Unlike the devices that came before, theirs would be privacy-protecting.

Tampering and bullying claims

In the spring of 2021, Widder received a letter informing him he was being investigated for alleged misconduct for tampering with university computing equipment. It also warned him that the way he had acted could be seen as bullying.

Department-wide email threads, shared with MIT Technology Review, hint at just how personal the Mites debate had become—and how Widder had, in the eyes of some of his colleagues, become the bad guy. “People taking out sensors on their own (what’s the point of these deep conversations if we are going to just literally take matters in our hands?) and others posting on social media is *not ethical*,” one professor wrote. (Though the professor did not name Widder, it was widely known that he had done both.)

“I do believe some people felt bullied here, and I take that to heart,” Widder says, though he also wonders, “What does it say about our field if we’re not used to having these kinds of discussions and … when we do, they’re either not taken seriously or … received as bullying?” (The researchers did not respond to questions about the bullying allegations.)

The disciplinary action was dropped after Widder plugged the sensors back in and apologized, but to Aldrich, “the letter functions as a way to punish David for speaking up about an issue that is inconvenient to the faculty, and to silence criticism from him and others in the future,” as he wrote in an official response to Widder’s doctoral review.

Herbsleb, the department chair and Widder’s advisor, declined to comment on what he called a “private internal document,” citing student privacy.

While Widder believes that he was punished for his criticisms, the researchers had taken into account some of those critiques already. For example, the researchers offered to let building occupants turn off the Mites sensors in their offices by asking to opt out via email. But this remained impossible in public spaces, in part because “there’s no way for us to even know who’s in the public space,” the researchers told us.

By February 2023, occupants in nine offices out of 110 had written to the researchers to disable the Mites sensors in their own offices—including Widder and Aldrich.

The researchers point to this small number as proof that most people are okay with Mites. But Widder disagrees; all it proves, he says, is that people saw how he was retaliated against for removing his own Mites sensors and were dissuaded from asking to have theirs turned off. “Whether or not this was intended to be coercive, I think it has that effect,” he says.

“The high-water mark”

On a rainy day last October, in a glass conference room on the fourth floor of TCS Hall, the Mites research team argued that the simmering tensions over their project—the heated and sometimes personal all-department emails, Slack exchanges, and town halls—were a normal part of the research process.

“You may see this discord … through a negative lens; we don’t,” Harrison said.

“I think it’s great that we’ve been able to foster a project where people can legitimately … raise issues with it … That’s a good thing,” he added.

“I’m hoping that we become the high-water mark for how to do this [sensor research] in a very deliberate way,” said Agarwal.

Other faculty members—even those who have become staunch supporters of the Mites project, like Lorrie Cranor, a professor of privacy engineering and a renowned privacy expert—say things could have been done differently. “In hindsight, there should have been more communication upfront,” Cranor acknowledges—and those conversations should have been ongoing so that current students could be part of them. Because of the natural turnover in academia, she says, many of them had never had a chance to participate in these discussions, even though long-standing faculty were informed about the project years ago.

She also has suggestions for how the project could improve. “Maybe we need a Mites sensor in a public area that’s hooked up to a display that gives you a livestream, and you can jump up and down and whistle and do all sorts of stuff in front of it and see what data is coming through,” she says. Or let people download the data and figure out, “What can you reconstruct from this? … If it’s possible to reverse-engineer it and figure something out, someone here probably will.” And if not, people might be more inclined to trust the project.

The devices could also have an on-off switch, Herbsleb, the department chair, acknowledges: “I think if those concerns had been recognized earlier, I’m sure Yuvraj [Agarwal] would have designed it that way.” (Widder still thinks the devices should have an off switch.)

But still, for critics, these actual and suggested improvements do not change the fact that “the public conversation is happening because of a controversy, rather than before,” Aronson says.

Nor do the research improvements take away what Widder experienced. “When I raised concerns, especially early on,” he says, “I was treated as an attention seeker … as a bully, a vandal. And so if now people are suggesting that this has made the process better?” He pauses in frustration. “Okay.”

Besides, beyond any improvements made in the research process at CMU, there is still the question of how the technology might be used in the real world. That commercialized version of the technology might have “higher-quality cameras and higher-quality microphones and more sensors and … more information being sucked in,” notes Aronson. Before something like Mites rolls out to the public, “we need to have this big conversation” about whether it is necessary or desired, he says.

“The big picture is, can we trust employers or the companies that produce these devices not to use them to spy on us?” adds Aldrich. “Some employers have proved they don’t deserve such trust.”

The researchers, however, believe that worrying about commercial applications may be premature. “This is research, not a commercial product,” they wrote in an emailed statement. “Conducting this kind of research in a highly controlled environment enables us to learn and advance discovery and innovation. The Mites project is still in its early phases.”

But there’s a problem with that framing, says Aronson. “The experimental location is not a lab or a petri dish. It’s not a simulation. It’s a building that real human beings go into every day and live their lives.”

Widder, the project’s most vocal critic, can imagine an alternative scenario where perhaps he could have felt differently about Mites, had it been more participatory and “collaborative.” Perhaps, he suggests, the researchers could have left the devices, along with an introduction and instruction booklet, on department members’ desks so they could decide if they wanted to participate. That would have ensured that the research was done “based on the principle of opt-in consent to even have these in the office in the first place.” In other words, he doesn’t think technical features like encryption and edge computing can replace meaningful consent.

Even these sorts of adjustments wouldn’t fundamentally change how Widder feels, however. “I’m not willing to accept the premise of … a future where there are all of these kinds of sensors everywhere,” he says.

The 314 Mites that remain in the walls and ceilings of TCS Hall are, at this point, unlikely to be ripped out. But while the fight over this project may well have wound down, debates about privacy are just beginning.