All together now: the most trustworthy covid-19 model is an ensemble

Earlier this spring, a paper studying covid forecasting appeared on the medRxiv preprint server with an authors’ list running 256 names long.

At the end of the list was Nicholas Reich, a biostatistician and infectious-disease researcher at the University of Massachusetts, Amherst. The paper reported results of a massive modeling project that Reich has co-led, with his colleague Evan Ray, since the early days of the pandemic. The project began with their attempts to compare various models online making short-term forecasts about covid-19 trajectories, looking one to four weeks ahead, for infection rates, hospitalizations, and deaths. All used varying data sources and methods and produced vastly divergent forecasts.

“I spent a few nights with forecasts on browsers on multiple screens, trying to make a simple comparison,” says Reich (who is also a puzzler and a juggler). “It was impossible.”

In an effort to standardize an analysis, in April 2020, Reich’s lab, in collaboration with US Centers for Disease Control and Prevention, launched the “COVID-19 Forecast Hub.” The hub aggregates and evaluates weekly results from many models and then generates an “ensemble model.” The upshot of the study, Reich says, is that “relying on individual models is not the best approach. Combining or synthesizing multiple models will give you the most accurate short-term predictions.”

“The sharper you define the target, the less likely you are to hit it.”

Sebastian Funk

The purpose of short-term forecasting is to consider how likely different trajectories are in the immediate future. This information is crucial for public health agencies in making decisions and implementing policy, but it’s hard to come by, especially during a pandemic amid ever-evolving uncertainty.

Sebastian Funk, an infectious disease epidemiologist at the London School of Hygiene & Tropical Medicine, borrows from the great Swedish physician Hans Rosling, who in reflecting on his experience helping the Liberian government fight the 2014 Ebola epidemic, observed: “We were losing ourselves in details … All we needed to know is, are the number of cases rising, falling, or leveling off?”

“That in itself is not always a trivial task, given that noise in different data streams can obscure true trends,” says Funk, whose team contributes to the US hub, and this past March launched a parallel venture, the European COVID-19 Forecast Hub, in collaboration the European Centre for Disease Prevention and Control.

Trying to hit the bull’s eye

To date, the US COVID-19 Forecast Hub has included submissions from about 100 international teams, in academia, industry, and government, as well as independent researchers, such as the data scientist Youyang Gu. Most teams try to mirror what’s happening in the world with a standard epidemiological framework. Others use statistical models that crunch numbers looking for trends, or deep learning techniques; some mix-and-match.

Every week, teams each submit not only a point forecast predicting a single number outcome (say, that in one week there will be 500 deaths). They also submit probabilistic predictions that quantify the uncertainty by estimating the likelihood of the number of cases or deaths at intervals, or ranges, that get narrower and narrower, targeting a central forecast. For instance, a model might predict that there’s a 90 percent probability of seeing 100 to 500 deaths, a 50 percent probability of seeing 300 to 400, and 10 percent probability of seeing 350 to 360.

“It’s like a bull’s eye, getting more and more focused,” says Reich.

Funk adds: “The sharper you define the target, the less likely you are to hit it.” It’s fine balance, since an arbitrarily wide forecast will be correct, and also useless. “It should be as precise as possible,” says Funk, “while also giving the correct answer.”

In collating and evaluating all the individual models, the ensemble tries to optimize their information and mitigate their shortcomings. The result is a probabilistic prediction, statistical average, or a “median forecast.” It’s a consensus, essentially, with a more finely calibrated, and hence more realistic, expression of the uncertainty. All the various elements of uncertainty average out in the wash.

The study by Reich’s lab, which focused on projected deaths and evaluated about 200,000 forecasts from mid-May to late-December 2020 (an updated analysis with predictions for four more months will soon be added), found that the performance of individual models was highly variable. One week a model might be accurate, the next week it might be way off. But, as the authors wrote, “In combining the forecasts from all teams, the ensemble showed the best overall probabilistic accuracy.”

And these ensemble exercises serve not only to improve predictions, but also people’s trust in the models, says Ashleigh Tuite, an epidemiologist at the Dalla Lana School of Public Health at the University of Toronto. “One of the lessons of ensemble modeling is that none of the models is perfect,” Tuite says. “And even the ensemble sometimes will miss something important. Models in general have a hard time forecasting inflection points—peaks, or if things suddenly start accelerating or decelerating.”

“Models are not oracles.”

Alessandro Vespignani

The use of ensemble modeling is not unique to the pandemic. In fact, we consume probabilistic ensemble forecasts every day when Googling the weather and taking note that there’s 90 percent chance of precipitation. It’s the gold standard for both weather and climate predictions.

“It’s been a real success story and the way to go for about three decades,” says Tilmann Gneiting, a computational statistician at the Heidelberg Institute for Theoretical Studies and the Karlsruhe Institute of Technology in Germany. Prior to ensembles, weather forecasting used a single numerical model, which produced, in raw form, a deterministic weather forecast that was “ridiculously overconfident and wildly unreliable,” says Gneiting (weather forecasters, aware of this problem, subjected the raw results to subsequent statistical analysis that produced reasonably reliable probability of precipitation forecasts by the 1960s).

Gneiting notes, however, that the analogy between infectious disease and weather forecasting has its limitations. For one thing, the probability of precipitation doesn’t change in response to human behavior—it’ll rain, umbrella or no umbrella—whereas the trajectory of the pandemic responds to our preventative measures.

Forecasting during a pandemic is a system subject to a feedback loop. “Models are not oracles,” says Alessandro Vespignani, a computational epidemiologist at Northeastern University and ensemble hub contributor, who studies complex networks and infectious disease spread with a focus on the “techno-social” systems that drive feedback mechanisms. “Any model is providing an answer that is conditional on certain assumptions.”

When people process a model’s prediction, their subsequent behavioral changes upend the assumptions, change the disease dynamics and render the forecast inaccurate. In this way, modeling can be a “self-destroying prophecy.”

And there are other factors that could compound the uncertainty: seasonality, variants, vaccine availability or uptake; and policy changes like the swift decision from the CDC about unmasking. “These all amount to huge unknowns that, if you actually wanted to capture the uncertainty of the future, would really limit what you could say,” says Justin Lessler, an epidemiologist at the Johns Hopkins Bloomberg School of Public Health, and a contributor to the COVID-19 Forecast Hub.

The ensemble study of death forecasts observed that accuracy decays, and uncertainty grows, as models make predictions farther into the future—there was about two times the error looking four weeks ahead versus one week (four weeks is considered the limit for meaningful short-term forecasts; at the 20-week time horizon there was about five times the error).

“It’s fair to debate when things worked and when things didn’t.”

Johannes Bracher

But assessing the quality of the models—warts and all—is an important secondary goal of forecasting hubs. And it’s easy enough to do, since short-term predictions are quickly confronted with the reality of the numbers tallied day-to-day, as a measure of their success.

Most researchers are careful to differentiate between this type of “forecast model,” aiming to make explicit and verifiable predictions about the future, which is only possible in the short- term; versus a “scenario model,” exploring “what if” hypotheticals, possible plotlines that might develop in the medium- or long-term future (since scenario models are not meant to be predictions, they shouldn’t be evaluated retrospectively against reality).

During the pandemic, a critical spotlight has often been directed at models with predictions that were spectacularly wrong. “While longer-term what-if projections are difficult to evaluate, we shouldn’t shy away from comparing short-term predictions with reality,” says Johannes Bracher, a biostatistician at the Heidelberg Institute for Theoretical Studies and the Karlsruhe Institute of Technology, who coordinates a German and Polish hub, and advises the European hub. “It’s fair to debate when things worked and when things didn’t,” he says. But an informed debate requires recognizing and considering the limits and intentions of models (sometimes the fiercest critics were those who mistook scenario models for forecast models).

“The big question is, can we improve?”

Nicholas Reich

Similarly, when predictions in any given situation prove particularly intractable, modelers should say so. “If we have learned one thing, it’s that cases are extremely difficult to model even in the short run,” says Bracher. “Deaths are a more lagged indicator and are easier to predict.”

In April, some of the European models were overly pessimistic and missed a sudden decrease in cases. A public debate ensued about the accuracy and reliability of pandemic models. Weighing in on Twitter, Bracher asked: “Is it surprising that the models are (not infrequently) wrong? After a 1-year pandemic, I would say: no.” This makes it all the more important, he says, that models indicate their level of certainty or uncertainty, that they take a realistic stance about how unpredictable cases are, and about the future course. “Modelers need to communicate the uncertainty, but it shouldn’t be seen as a failure,” Bracher says.

Trusting some models more than others

As an oft-quoted statistical aphorism goes, “All models are wrong, but some are useful.” But as Bracher notes, “If you do the ensemble model approach, in a sense you are saying that all models are useful, that each model has something to contribute”—though some models may be more informative or reliable than others.

Observing this fluctuation prompted Reich and others to try “training” the ensemble model—that is, as Reich explains, “building algorithms that teach the ensemble to ‘trust’ some models more than others and learn which precise combination of models works in harmony together.” Bracher’s team now contributes a mini-ensemble, built from only the models that have performed consistently well in the past, amplifying the clearest signal.

“The big question is, can we improve?” Reich says. “The original method is so simple. It seems like there has to be a way of improving on just taking a simple average of all these models.” So far, however, it is proving harder than expected—small improvements seem feasible, but dramatic improvements may be close to impossible.

A complementary tool for improving our overall perspective on the pandemic beyond week-to-week glimpses is to look further out on the time horizon, four to six months, with those “scenario modeling.” Last December, motivated by the surge in cases and the imminent availability of the vaccine, Lessler and collaborators launched the COVID-19 Scenario Modeling Hub, in consultation with the CDC.

Scenario models put bounds on the future based on well-defined “what if” assumptions—zeroing in on what are deemed to be important sources of uncertainty and using them as leverage points in charting the course ahead.

To this end, Katriona Shea, a theoretical ecologist at Penn State University and a scenario hub coordinator, brings to the process a formal approach to making good decisions in an uncertain environment—drawing out the researchers via “expert elicitation,” aiming for a diversity of opinions, with a minimum of bias and confusion. In deciding what scenarios to model, the modelers discuss what might be important upcoming possibilities, and they ask policy makers for guidance about what would be helpful.

They also consider the broader chain of decision-making that follows projections: decisions by business owners around reopening, and decisions by the general public around summer vacation; decisions triggering levers that can be pulled in hopes of changing the pandemic’s course, others simply informing what viable strategies can be adopted to cope.

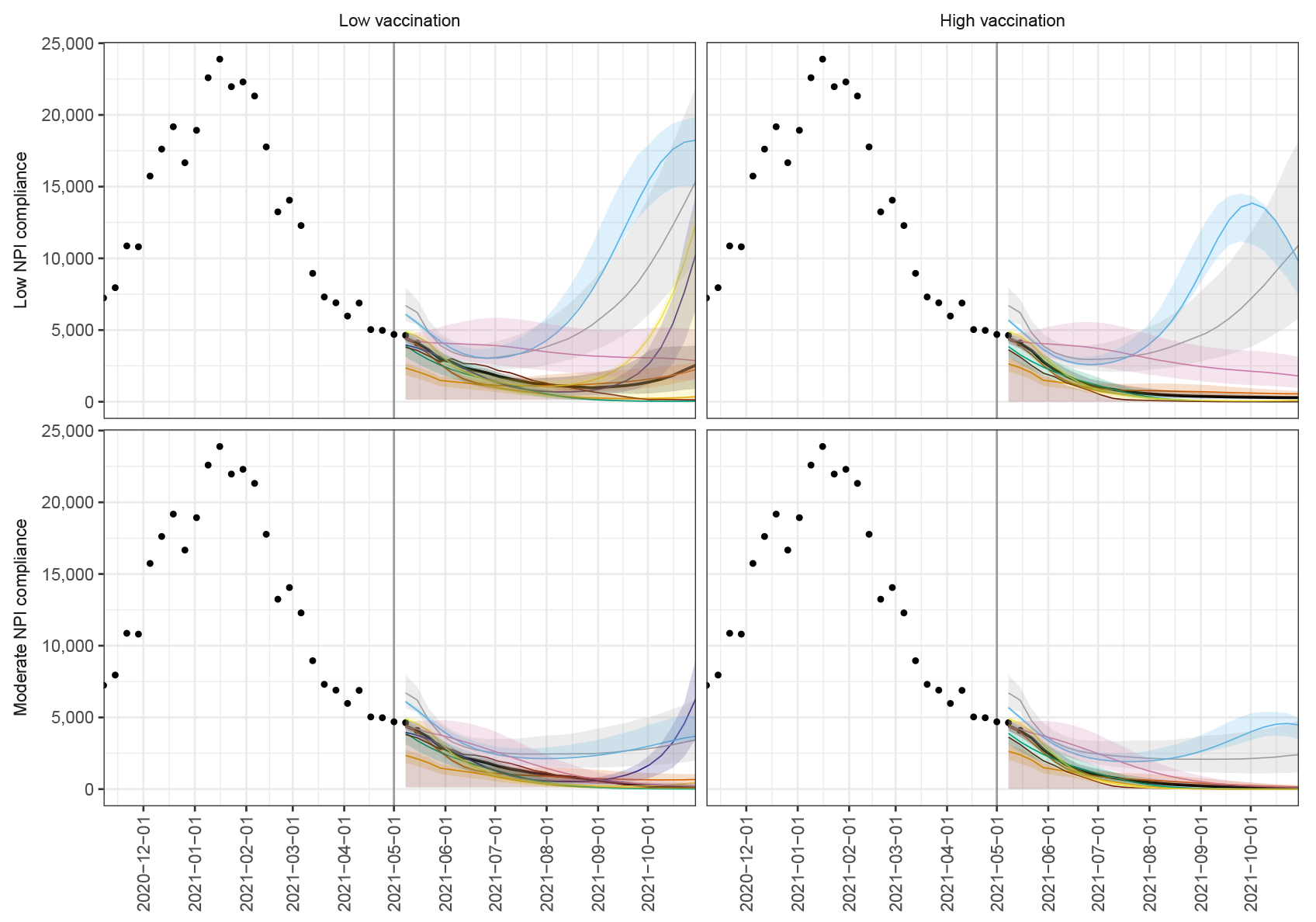

The hub just finished its fifth round of modeling with the following scenarios: What are the case, hospitalization and death rates from now through October if the vaccine uptake in the US saturates nationally at 83 percent? And what if vaccine uptake is 68 percent? And what are the trajectories if there is a moderate 50 percent reduction in non-pharmaceutical interventions such as masking and social distancing, compared with an 80 percent reduction?

With some of the scenarios, the future looks good. With the higher vaccination rate and/or sustained non-pharmaceutical interventions such as masking and social distancing, “things go down and stay down,” says Lessler. With the opposite extreme, the ensemble projects a resurgence in the fall—though the individual models show more qualitative differences for this scenario, with some projecting that cases and deaths stay low, while others predict far larger resurgences than the ensemble.

The hub will model a few more rounds yet, though they’re still discussing what scenarios to scrutinize—possibilities include more highly transmissible variants, variants achieving immune escape, and the prospect of waning immunity several months after vaccinations.

We can’t control those scenarios in terms of influencing their course, Lessler says, but we can contemplate how we might plan accordingly.

Of course, there’s only one scenario that any of us really want to mentally model. As Lessler puts it, “I’m ready for the pandemic to be over.”