A feminist internet would be better for everyone

It’s April 13, 2025. Like most 17-year-olds, Maisie grabs her phone as soon as she wakes up. She checks her apps in the same order every morning: Herd, Signal, TikTok.

Herd started out as a niche social network aimed at girls, but everyone’s on it these days, even the boys. Maisie goes to her personal page and looks at what she’s pinned there: photos of her dog, her family, her school science project. It’s like a digital scrapbook of all the things she loves, all in one place. She reads comments from her friends and looks at what they’ve added to their own pages. She doesn’t really go on Facebook (only grandparents still use it) or Twitter. Herd is just … nicer. No like counts. No follower metrics. No shouty strangers.

She checks Signal. Signal’s been popular since the Great WhatsApp Exodus of 2023, when WhatsApp announced it would share yet more data with Facebook, and users fled to more secure, encrypted alternatives.

Next, TikTok. She watches a video of some girls dancing, swipes up, sees a cat jumping through a hoop, swipes up, reads an explainer on volcanoes. TikTok doesn’t collect so much data these days: nothing on her location or her keystrokes. Much of that sort of data collection is illegal now, thanks to the Data Protection Act that US lawmakers pushed through three years ago despite lobbying by Big Tech.

Maisie’s running out of time. She needs to get ready for school, but she thinks about checking Instagram. Though she did get a weird message from a guy on there recently, she used the app’s simple, one-click process to report him and knows she won’t be hearing from him again. Instagram has taken harassment much more seriously these past couple of years. There are so many competitors and choices for where to spend your time online—people won’t bother staying in a place that doesn’t make them feel good about themselves.

__________________________________________________________________________

This vision of an internet free from harassment, hate, and misogyny might seem far-fetched, particularly if you’re a woman. But a small, growing group of activists believe the time has come to reimagine online spaces in a way that centers women’s needs rather than treating them as an afterthought. They aim to force tech companies to detoxify their platforms, once and for all, and are spinning up brand-new spaces built on women-friendly principles from the start. This is the dream of a “feminist internet.”

The movement might seem naïve in a world where many have given up on the idea of technology as a force for good. But aspects of the feminist internet are already taking shape. Achieving this vision would require us to radically overhaul the way the web works. But if we build it, it won’t just be a better place for women; it will be better for everyone.

Quantifying hatred

In The Female Eunuch, one of feminism’s cornerstone texts, Germaine Greer wrote in 1970 that “women have very little idea of how much men hate them.”

Thanks to the internet, as Arzu Geybulla will tell you, they now know only too well.

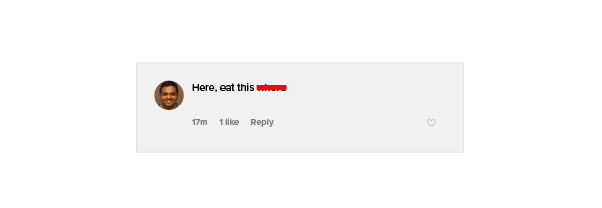

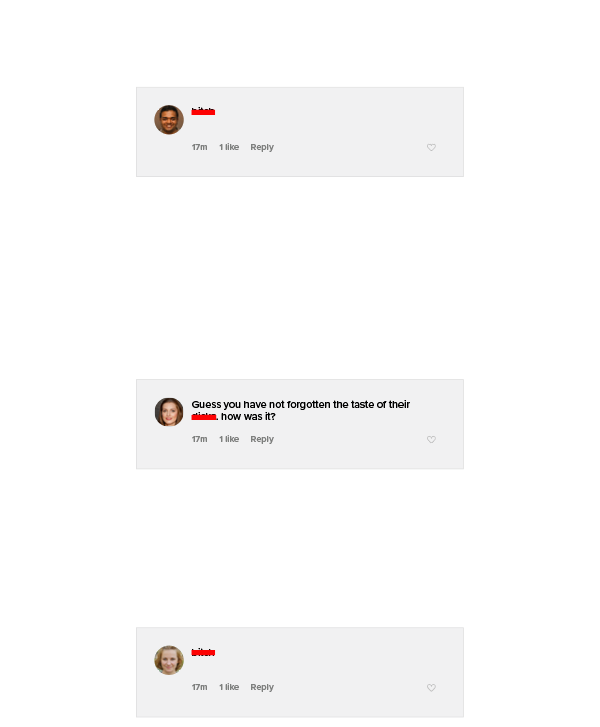

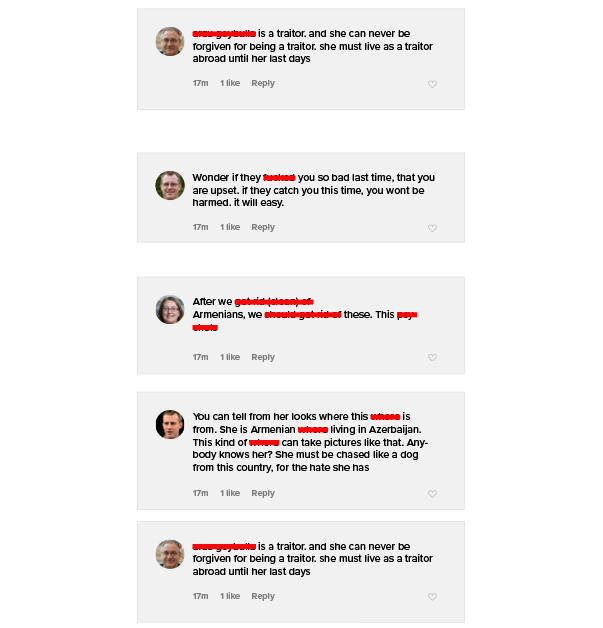

As an Azerbaijani journalist writing for an Armenian newspaper, Geybulla became a target for online trolls because she was perceived as a “traitor” to her country of birth. (Azerbaijan and Armenia have a long history of animosity, which broke out into open warfare last year.) Her first death threat came in 2014, after she endured days of violent, sexist abuse online. “They said I had three days left. They told me where I’d be buried,” she says.

She also knows the abuse was worse because she’s a woman.

“The language is very different,” she says. “The predominant theme is violating my body and punishing me—messages saying gang-rape her, deport her, shoot her, silence her, keep her mouth shut, hang her.”

Women have always been particularly subject to abuse online. They’re attacked not simply because of what they say or do, but because of their gender. If they’re people of color or LGBTQ+, or have a public-facing job as a politician or journalist, it’s worse. The same sexist message runs through much of the vitriol: “Stop speaking, or else.”

The pandemic has exacerbated the problem as work, play, health, dating, and much else besides have been dragged into virtual-only environments. Half of women and nonbinary people surveyed by the UK charity Glitch reported experiencing online abuse last year, the vast majority of it on Twitter. A recent report by the Pew Research Center found that 33% of women under 35 have been sexually harassed online; in 2017, that figure was 21%.

Sometimes, the abuse is part of a coordinated campaign. That’s where the “manosphere” comes in. The informal term refers to a loose collection of websites and online groups dedicated to attacking feminists and women more generally.

Angry men gather on forums like Reddit and 4chan, and on websites like A Voice for Men. Occasionally, they identify and agree upon targets for trolling. During the controversy known as Gamergate, in 2014, several women in the video game industry faced a coordinated doxxing campaign (in which attackers found and posted their personal details like phone numbers and addresses) and a barrage of rape and death threats.

The manosphere is not an abstract, virtual threat: it can have real-world consequences. It’s where Faisal Hussain spent hours self-radicalizing before he embarked on a shooting spree, killing a woman and a girl and injuring 14 other people in Toronto in 2018. On his computer, police found a copy of a manifesto by Elliot Rodger—another man who had been deeply embedded in the manosphere, and who ended up going on a murderous rampage in Isla Vista, California, in 2014. Rodger’s manifesto said he was taking revenge on women for rejecting him, and attacking sexually active men out of envy.

To be a woman online is to be highly visible and a direct target of that hate, says Maria Farrell, a tech policy expert and former director of the Open Rights Group.

“My first rape and death threats came in 2005,” she says. Farrell wrote a blog post criticizing the US response to Hurricane Katrina as racist and was subsequently inundated with abuse. Since then, she says, the situation has worsened: “A decade or so ago, you had to say something that attracted opprobrium. That’s not the case now. Now it’s just every day.” She is extremely careful about which services she uses and never shares her location online.

Death threats and online abuse aren’t the only problems that disproportionately affect women online, though. There are also less tangible harms, like algorithmic discrimination. For example, try Googling the terms “school boy” and “school girl.” The image results for boys are mostly innocuous, whereas the results for girls are dominated by sexualized imagery. Google ranks these results on the basis of factors such as the web page an image appears on, its alt text or caption, and what image recognition algorithms judge it to contain. Bias creeps in via two routes: the image recognition algorithms themselves are trained on sexist images and captions from the internet, and web pages and captions talking about women are skewed by the pervasive sexism that’s built up over decades online. In essence, the internet is a self-reinforcing misogyny machine.

For years, Facebook has trained its machine-learning systems to spot and scrub out any images that smack of sex or nudity, but these algorithms have been repeatedly reported to be overzealous, censoring photos of plus-size women, or women breastfeeding their babies. The fact that the company did this while simultaneously allowing hate speech to run rampant on its platform is not lost on activists. “This is what happens when you let Silicon Valley bros set the rules,” says Carolina Are, an algorithmic bias researcher at City, University of London.

How we got here

Every woman I spoke to for this story said she had experienced greater volumes of harassment in recent years. One likely culprit is the design of social media platforms, and specifically their algorithmic underpinnings.

In the early days of the web, tech companies chose to support their services mostly through advertising. We simply weren’t given the option to subscribe to Google, Facebook, or Twitter. Instead, the currency these companies crave is eyeballs, clicks, and comments, all of which generate data they can package and use to market their users to the real customers: advertisers.

“Platforms try to maximize engagement—enragement, really—through algorithms that drive more clicks,” says Farrell. Virtually every mainstream tech platform prizes engagement above all else. That privileges incendiary content. Charlotte Webb, who cofounded the activist collective Feminist Internet in 2017, puts it bluntly: “Hate makes money.” Facebook made a profit of $29 billion in 2020.

The ignorance and myopia that underpinned techno-optimism in the 1990s were part of the problem, says Mar Hicks, a tech historian at the Illinois Institute of Technology.

Many of the internet’s early pioneers believed it could become a neutral virtual world free from the messy politics and complications of the physical one. In 1996, John Perry Barlow, cofounder of the Electronic Frontier Foundation, wrote the movement’s sacred text, “A Declaration of the Independence of Cyberspace.” It included the line “We are creating a world that all may enter without privilege or prejudice accorded by race, economic power, military force, or station of birth.” Gender is not mentioned anywhere in the declaration.

“The whole idea of the early internet was that it would revolutionize power relationships and democratize things,” says Hicks. “That was always a foolish, ahistorical view. It was not even what was happening at the time.”

In fact, just as Barlow’s declaration was published, women were fleeing tech jobs. Women had been at the core of the tech industry’s early development but were gradually edged out just as pay and prestige increased, as Bloomberg Technology anchor Emily Chang explained in her 2018 book Brotopia. The high point was 1984, when about 35% of the US tech workforce was female. Now it’s less than 20%, and that number hasn’t budged in a decade. And if you look at the upper echelons of tech company management (the boards and the directors), women are even rarer.

That’s a problem, because it means that women’s voices were, and in many cases still are, largely overlooked in the design and development of most online services. Rather than upending the power imbalance between men and women, in many ways the tech boom cemented it more deeply in place.

Reinventing the internet

So what would a “feminist internet” look like?

There’s no single vision or approved definition. The closest thing the movement has to a set of commandments are 17 principles published in 2016 by the Association for Progressive Communications (APC), a sort of United Nations for online activist groups. It has 57 organizational members who campaign on everything from climate change to labor rights to gender equality. The principles were the outcome of three days of open, unstructured talks among nearly 100 feminists in 2014, plus additional workshops with activists, digital rights specialists, and feminist academics.

Many of the principles relate to redressing the vast power imbalance between tech companies and ordinary people. Feminism is obviously about equality between men and women, but in essence it is about power—who gets to wield it, and who gets exploited. Building a feminist internet, then, is in part about redistributing that power away from Big Tech and into the hands of individuals—especially women, who have historically had less of a say.

The principles state that a feminist internet would be less hierarchical. More cooperative. More democratic. More consensual. More customizable and suited to individual needs, rather than imposing a one-size-fits-all model.

For example, the online economy would be less reliant on scooping up our data and using it to sell advertising. It would do more to address hatred and harassment online, while preserving freedom of expression. It would protect people’s privacy and right to anonymity. These are all issues that affect every internet user, but the consequences are often greater for women when things go awry.

To live up to these principles, companies would have to give more control and decision-making power to users. This would mean not only that we would be able to adjust things like our security and privacy settings (with the strongest privacy as the default), but that we could act collectively, by proposing and voting on new features, for example. Widespread harassment would not be seen as a tolerable price women have to pay, but as an unacceptable sign of failure. People would be more aware of their data rights as individuals, and more willing to take collective action against tech companies that abused those rights. They’d be able to port their data easily from one company to another or revoke access to it altogether.

“Our basic premise is that we love the internet, but we want to question the money, the objectives, and the people running the spaces we all use,” says Erika Smith, who has been a member of the APC women’s rights program since 1994.

One starting point is just to learn to view the internet through a feminist lens, looking at every service and product and asking: How could this be used to harm women?

Tech companies could incorporate this kind of gender impact assessment into the decision-making process before any new product is launched. Engineers would need to ask how the product might be abused by people seeking to harm women. For example, could it be used for stalking or domestic abuse, or could it lead to more harassment online?

Gender impact assessments alone would not fix the many problems women face online, but they would at least introduce a bit of necessary friction and force teams to slow down and think about the societal impact of what they’re building.

Again, these assessments would not just benefit women. The failure to think about how a product will affect women makes those products worse for everyone. A perfect example comes from the fitness tracking company Strava. In 2018, the company realized that its service could be used to identify individual military or intelligence personnel: security experts had connected the dots from users’ running routes to known US bases overseas. But if Strava had listened to women, it would already have known about this risk, says Farrell.

“Feminists warned them that it could be used to stalk and track individual women by looking at their running routes,” she says. “That’s why having a feminist eye on the internet is such an advantage, because it knows that what can be abused will be abused.”

How we fix it

Feminist technologists have spent years telling tech companies what they’re doing wrong, and have been roundly ignored. Now they’re taking matters into their own hands. Activists are building products, running campaigns, and convening events to tackle virtually every aspect of sexism online.

If we manage to create a feminist internet, it will be thanks at least partly to the sheer force of will among people involved in this movement.

Take Tracy Chou.

She grew up in Silicon Valley, went to Stanford University to study computer science, and then worked as a software engineer at Quora, Pinterest, and Facebook. Like many young women, she spent a lot of time on social media. But eventually, she got sick of being constantly interrupted by misogynistic and racist comments, a problem she says ramped up over time, especially after she started advocating for more diversity in Silicon Valley.

Occasionally the harassment even spilled over into physical threats. A man who had been stalking her online flew to San Francisco twice and showed up where she was staying, prompting her to seek advice from a private security firm. The police had told her to “tell us when something happens.”

“For most people, there really isn’t much we can do about the harassment, apart from getting a therapist,” she says, rolling her eyes.

But Chou isn’t most people. She used her engineering skills to build a tool called Block Party, which aims to make Twitter more bearable by helping people filter out abuse. All the replies and mentions you don’t want to see are put in a “lockout folder” you or an appointed friend can check at a time of your own choosing (or not at all). Its early users have predominantly been women who face rampant online abuse, Chou says: reporters, activists, and scientists working on covid-19. But mostly, she made it for herself: “I’m doing this because I have to deal with online harassment and I don’t like it. It’s solving my own problem.”

Since Chou started building Block Party, at the end of 2018, Twitter has adopted one or two of its features. For example, it now lets people limit who can reply to their tweets.

Some activists want to go further than dealing with abuse after it has already happened. They want us to question some of the underlying assumptions that lead to such harassment in the first place.

Take voice assistants and smart speakers. Over one-third of Americans routinely use smart speakers. Millions of us are talking to voice assistants every single day. In almost every case, we’re interacting with a female voice. And that’s a problem, because it perpetuates a stereotype of passive, agreeable, eager-to-please femininity that harks back to the 1950s housewife, says Yolande Strengers, an associate professor and digital sociologist at Monash University. “You can be abusive to them, and they can’t fight back,” she says.

A 2019 United Nations report concluded that smart speakers reinforce harmful gender stereotypes. It called for companies to stop making digital assistants female by default and explore ways to make them sound “genderless.” One project, called Q, set out to do just that. And if you listen for yourself, you’ll hear that it has done a pretty convincing job. Q was created by Virtue, an agency set up by the media company Vice. The team consulted linguists to define the parameters for a “male” and “female” voice and figure out where they overlap. Then they recorded lots of voices, altered them, and tested them on thousands of people to identify the most gender neutral. They’ve already done the hard work. If Apple or Amazon wanted to, they could adopt it tomorrow.

Q isn’t the only project trying to address the issues at a root level. Caroline Sinders, a machine-learning researcher and artist, has built an open, free tool kit that helps people interrogate every step of the AI process and analyze whether it is feminist or intersectional (taking into account overlapping issues like structural racism, sexism, homophobia, and classism) and whether it has any creeping bias. Superrr Lab in Berlin is a feminist technology collective that, among other things, explores utopian ideas about how to ensure that future digital products better reflect the needs of women and marginalized groups.

But some activists aren’t satisfied with just improving existing platforms.

Mady Dewey and Ali Howard—who both work at Google—plan to launch their own social network, Herd, in April. They want to create a nontoxic online experience for women and girls, but they hope that it will be better for all users. They have overhauled the core design features we all take for granted in social media, especially “likes” and comments, which prize engagement and help encourage abuse.

Instead of opening the app and landing on a feed, people arrive on their own profile—a sort of “digital garden” where they can store photos, thoughts, and things that make them happy. There are no likes. There are limits on how many times people can comment, to prevent trolling campaigns. The aim is to cultivate a kinder, friendlier, calmer environment. The cofounders say they are essentially building Herd for their insecure, Instagram-scrolling 15-year-old selves. “We have big dreams for this, but to be honest, we’re primarily building it for us. We’d rather make a platform that means a lot to a smaller group than nothing to millions,” says Dewey.

So what’s stopping us from pushing projects like this into the mainstream?

Cash. Or more precisely, a lack of it. Women have never received more than 3% of US venture capital money, according to PitchBook. It’s surely no coincidence that venture capital is still mostly a boys’ club: only 14% of decision-makers at VC firms are women, according to Axios research. “Just imagine what I could do with $0.7 billion of the $27.7 billion Slack just sold for. Or even just 0.7% of that sum,” says Suw Charman-Anderson, a diversity-in-tech advocate who founded Ada Lovelace Day, a celebration of the first computer programmer, in 2009.

Thinking bigger

But a patchwork approach of individual projects is going to take years to deliver results, if indeed it ever can. Some activists think the problem needs to be addressed from the top down.

Many are hopeful that the coming push by US politicians to rein in and regulate Big Tech will benefit women specifically. AI policy expert Mutale Nkonde points to two bills as examples: the Algorithmic Accountability Act, which would force companies to check their algorithms for bias, including gender discrimination, and the No Biometric Barriers to Housing Act, which would ban the use of facial recognition in public housing. Neither bill passed in the last session of Congress, when Republicans controlled the Senate, but Biden’s presidency gives her cause for hope.

“We have someone we can pressurize now, someone we can persuade,” she says. Biden’s administration has signaled it plans to address online harassment with a specific focus on sexist abuse, although the concrete details have not yet been released.

Activists want lawmakers to focus on issues like algorithmic oversight and accountability, and push platforms to move away from the sort of rapid, harmful, engagement-driven growth we’ve seen so far. Legally mandated content moderation standards could help, as could more cooperation between the tech companies on issues of online abuse.

Harassment is a cross-platform problem, after all. Once trolls have identified a target, they will comb through that person’s online life, looking at every single social media profile, email address, and online post before unleashing hell. “They will find any surface area they can to try to attack you,” Chou says. The barriers to women trying to fight back against abuse are vast. The reporting process differs from Twitter to Facebook to TikTok, complicating an already time-consuming task. “It is too much to try to catch all the abuse on all your accounts, all at once,” says Geybulla. “And this isn’t how I want to spend what little free time I have.”

This could be addressed, in part, by building a single, standardized process for reporting abuse that all the big tech platforms agree to use. The World Wide Web Foundation has been running online workshops on how to address gender-based online violence for the past several months, and the fact that there’s no way to deal with cross-platform harassment right now emerged as one of the biggest barriers women face, says Azmina Dhrodia, senior gender policy manager at the foundation.

The foundation has also been consulting with Facebook, Twitter, Google, YouTube, and TikTok on this issue and says the companies are expected to make “major commitments” at the Generation Equality Forum, a UN-sponsored gathering for gender equality set to be held in Paris in late June.

Ultimately, women have the right to be online without fear of harassment. Think of all the women who have not set up online retailers, or started blogging, or run for office, or created a YouTube channel, because they worry they will be harassed or even physically harmed. When women are chased off platforms, it becomes a civil rights issue.

But it’s also in all of our best interests to protect one another. A world in which everyone can benefit equally from the web will mean a richer mix of the voices and opinions we hear, more information we can access and share, and a more meaningful experience online for everyone.

Perhaps we’re at a tipping point. “I am optimistic that we can undo some of these blatant harms, and blatant abrogation of companies’ duties to the public and consumers,” Hicks says. “We’ve seen the automobile industry and how Ralph Nader got seatbelts—we saw how Detroit needed to be regulated. We are at that point with Silicon Valley.”